The rise of specialized computing is bringing powerful new hardware into the data center. This is a trend we first noted last year, and it has come into sharp focus in recent weeks with a flurry of announcements of new chips and servers. Much of this new server hardware offers data-crunching power for artificial intelligence and other types of high-performance computing (HPC), while other products offer more powerful and efficient gear for traditional workloads.

Some of this new hardware is already being deployed in cloud data centers, bringing new capabilities to users looking to leverage the cloud for machine learning tasks or HPC. In some cases, these new offerings will factor into server refresh plans for companies operating their own data centers, even as the industry awaits the release of new products from Intel later this year.

One thing is clear: Innovation is alive and well in the market for data center hardware, with active contributions from hyperscale players, open hardware projects and leading chip and server vendors. Here’s an overview of the new hardware offerings from Google, NVIDIA, AMD, ARM, Intel and Microsoft.

Google Offers on-Demand Access to Cloud TPUs

Google’s in-house technology sets a high bar for other major tech players seeking an edge using AI to build new services and improve existing ones. Thus, Google’s May 17 announcement of a new version of its Tensor Processing Unit (TPU) hardware made major waves in the AI world. Google will offer the new chips commercially on Google Cloud Platform.

The TPU is a custom ASIC tailored for TensorFlow, an open source software library for machine learning that was developed by Google. An ASIC (Application Specific Integrated Circuit) is a chip designed to perform one specific task. Recent examples of ASICs include the custom chips used in bitcoin mining. Google has used its TPUs to squeeze more operations per second into the silicon.

The new TPU 2.0 brings impressive performance and supports both categories of AI computing: training and inference. In training, the network learns a new capability from existing data. In inference, the network applies its capabilities to new data, using its training to identify patterns and perform tasks, usually much more quickly than humans could. These two tasks usually require different types of hardware, but Google says its newest TPU has surmounted that challenge.
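To make the distinction concrete, here is a toy sketch in Python. It is illustrative only and says nothing about how TPUs are actually programmed: the functions `train` and `infer` are hypothetical names, and the "model" is a single-weight linear fit rather than a neural network. Training loops over known data many times to adjust the weight; inference is one cheap pass using the weight already learned, which helps explain why the two phases have often been served by different hardware.

```python
# Toy illustration (hypothetical, not TPU-specific): learn y = w * x by
# gradient descent (training), then apply the learned w to new inputs (inference).

def train(xs, ys, lr=0.01, epochs=500):
    """Training: repeatedly adjust the weight w to reduce squared error on known data."""
    w = 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of the mean squared error (1/n) * sum((w*x - y)^2) with respect to w
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * grad
    return w


def infer(w, xs):
    """Inference: a single forward pass over new data with the already-learned weight."""
    return [w * x for x in xs]


if __name__ == "__main__":
    # The training data follows y = 2x, so training should recover w close to 2.
    w = train([1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0])
    print(round(w, 3))
    print([round(v, 2) for v in infer(w, [5.0, 10.0])])
```

Note the asymmetry: training here performs 500 passes over the data, while inference is a single multiplication per input.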

“Each of these new TPU devices delivers up to 180 teraflops of floating-point performance,” Google executives Jeff Dean and Urs Hölzle said in a blog post. “As powerful as these TPUs are on their own, though, we designed them to work even better together. Each TPU includes a custom high-speed network that allows us to build machine learning supercomputers we call ‘TPU pods.’ A TPU pod contains 64 second-generation TPUs and provides up to 11.5 petaflops to accelerate the training of a single large machine learning model. That’s a lot of computation!”

A Google “TPU pod” built with 64 second-generation TPUs delivers up to 11.5 petaflops of machine learning acceleration. (Photo: Google)
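The pod figure follows directly from the per-device number. A quick back-of-the-envelope check in Python, assuming perfect linear scaling across all 64 devices and using Google’s stated peak (not sustained) figures; the function name `pod_peak_petaflops` is hypothetical:

```python
# Back-of-the-envelope check of Google's stated peak figures (assumes perfect linear scaling).
TFLOPS_PER_TPU = 180  # "up to 180 teraflops" per second-generation TPU device
TPUS_PER_POD = 64     # "A TPU pod contains 64 second-generation TPUs"


def pod_peak_petaflops(tpus=TPUS_PER_POD, tflops_each=TFLOPS_PER_TPU):
    """Aggregate peak throughput of a pod, in petaflops (1 petaflop = 1,000 teraflops)."""
    return tpus * tflops_each / 1000


print(pod_peak_petaflops())  # 11.52
```

64 × 180 teraflops is 11,520 teraflops, or roughly 11.5 petaflops, matching Google’s number. Real training jobs would typically see less than this peak once communication between TPUs is taken into account.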

“With Cloud TPUs, you have the opportunity to integrate state-of-the-art ML accelerators directly into your production infrastructure and benefit from on-demand, accelerated computing power without any up-front capital expenses,” said Hölzle and Dean. “Since fast ML accelerators place extraordinary demands on surrounding storage systems and networks, we’re making optimizations throughout our Cloud infrastructure to help ensure that you can train powerful ML models quickly using real production data.”

NVIDIA Unveils Volta GPU Architecture

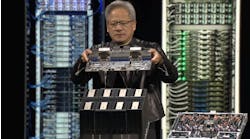

One of the other key players in AI hardware is NVIDIA, which rolled out its long-anticipated Volta GPU computing architecture on May 10 at its GPU Technology Conference. The first Volta-based processor is the Tesla V100 data center GPU, which brings speed and scalability for AI inferencing and training, as well as for accelerating HPC and graphics workloads.

NVIDIA founder and CEO Jensen Huang introduces the company’s new Volta GPU architecture at the GPU Technology Conference in Las Vegas. (Photo: NVIDIA Corp.)

“Artificial intelligence is driving the greatest technology advances in human history,” said Jensen Huang, founder and chief executive officer of NVIDIA, who unveiled Volta at his GTC keynote. “It will automate intelligence and spur a wave of social progress unmatched since the industrial revolution.”

Volta, NVIDIA’s seventh-generation GPU architecture, is built with 21 billion transistors and delivers a 5x improvement in peak teraflops over Pascal, NVIDIA’s current-generation GPU architecture. NVIDIA says that by pairing CUDA cores and the new Volta Tensor Core within a unified architecture, a single server with Tesla V100 GPUs can replace hundreds of commodity CPUs for traditional HPC.

The arrival of Volta was welcomed by several of NVIDIA’s largest customers.

“NVIDIA and AWS have worked together for a long time to help customers run compute-intensive AI workloads in the cloud,” said Matt Garman, vice president of Compute Services for Amazon Web Services. “We launched the first GPU-optimized cloud instance in 2010, and introduced last year the most powerful GPU instance available in the cloud. AWS is home to some of today’s most innovative and creative AI applications, and we look forward to helping customers continue to build incredible new applications with the next generation of our general-purpose GPU instance family when Volta becomes available later in the year.”

With EPYC, AMD Targets Server Market

Specs for the new AMD EPYC processors.

Weeks after unveiling its new Ryzen family of PC chips, AMD introduced its new offerings for the data center. The EPYC processor, previously codenamed “Naples,” brings AMD’s Zen x86 processing engine to the data center, scaling up to 32 physical cores. The first EPYC-based servers will launch in June with widespread support from original equipment manufacturers (OEMs) and channel partners.

“With the new EPYC processor, AMD takes the next step on our journey in high-performance computing,” said Forrest Norrod, senior vice president and general manager of Enterprise, Embedded & Semi-Custom Products. “AMD EPYC processors will set a new standard for two-socket performance and scalability. We believe that this new product line-up has the potential to reshape significant portions of the datacenter market with its unique combination of performance, design flexibility, and disruptive TCO.”

AMD was once a major player in the enterprise and data center markets with its Opteron processors, particularly from 2003 to 2008, but then lost ground to a resurgent Intel. AMD sought to shake things up in 2012 with its $334 million acquisition of microserver startup SeaMicro, but by 2015 it had retired the SeaMicro servers and gone back to the drawing board.

Securities analysts have been impressed with AMD’s server prospects with the EPYC processors, and at one point AMD shares surged on rumors that it would license its technology to old rival Intel (which turned out to be untrue). There are some signs that EPYC is at least getting a look from the type of web-scale customers that are critical for server success, as Dropbox is among the companies evaluating AMD’s new processors.

New Processors Reflect AI Ambitions for ARM

There has long been interest in whether low-power ARM processors could slash power bills for hyperscale data centers, but those hopes have led to repeated disappointments. That may be changing, as Microsoft has given a major boost to the nascent market for servers powered by low-energy ARM processors, which are widely used in mobile devices like iPhones and iPads.

Building on that momentum, ARM is targeting the market for AI computing. At this week’s Computex electronics show in Taiwan, ARM announced two new processors: the Cortex-A75 high-performance processor and the Cortex-A55 high-efficiency processor. Both are built on DynamIQ, the multi-core technology ARM announced in March 2017. The Cortex-A75 brings a brand-new architecture that boosts processor performance, and both chips are designed to expand the capabilities of the CPU to handle advanced workloads.

An overview of new processor technology from ARM.

ARM is not looking to go head-to-head with NVIDIA and Intel on training workloads in the data center. ARM’s focus is on mobile devices, where it has been a dominant player, and it is positioning its new chips to power AI processing on these edge devices.

“A cloud-centric approach is not an optimal long-term solution if we want to make the life-changing potential of AI ubiquitous and closer to the user for real-time inference and greater privacy,” writes Nandan Nayampally on the ARM blog. “ARM has a responsibility to rearchitect the compute experience for AI and other human-like compute experiences. To do this, we need to enable faster, more efficient and secure distributed intelligence between computing at the edge of the network and into the cloud.”

What About Intel? Microsoft Refines FPGAs, More to Come

As its competitors roll out new hardware, market leader Intel is preparing to unveil new server offerings later this year, including the Intel Xeon Processor Scalable Family, the chipmaker’s new brand for its data center processors. These include:

- The highly anticipated Lake Crest ASIC technology, which Intel obtained when it acquired Nervana, will come to market this year. Nervana is developing an ASIC that it says has achieved training speeds 10 times faster than conventional GPU-based systems and frameworks. The company says it has built a better interconnect, allowing its Nervana Engine to move data between compute nodes more efficiently.

- The newest Intel “Skylake” Xeon processors, arriving later this year, are being reorganized under the Scalable family, a move designed to position Intel for an evolving IT landscape in which data center operators are seeking to match hardware to new workloads. Intel will also offer four levels of performance and capabilities, grouped in a tiered model based on metals (bronze, silver, gold and platinum), to make the options simple to choose from.

Intel’s Jason Waxman shows off a server using Intel’s FPGA accelerators with Microsoft’s Project Olympus server design during his presentation at the Open Compute Summit. (Photo: Rich Miller)

In the meantime, Intel has been making the case for field programmable gate arrays (FPGAs) as AI accelerators. FPGAs are semiconductors that can be reprogrammed to perform specialized computing tasks, allowing users to tailor compute power to specific workloads or applications. Intel acquired its FPGA technology through its $16.7 billion acquisition of Altera, completed in 2015.

The flagship customer for FPGAs has been Microsoft, which last year began using Altera FPGA chips in all of its Azure cloud servers to create an acceleration fabric, an outgrowth of Microsoft’s Project Catapult research.

At last month’s Microsoft Build conference, Azure CTO Mark Russinovich disclosed major advances in Microsoft’s hyperscale deployment of Intel FPGAs, outlining a new cloud acceleration framework that Microsoft calls Hardware Microservices. The infrastructure used to deliver this acceleration is built on Intel FPGAs. This new technology will enable accelerated computing services, such as deep neural networks, to run in the cloud directly on the FPGAs without any CPU software in the loop, resulting in large advances in speed and efficiency.

“Microsoft is continuing to invest in novel hardware acceleration infrastructure using Intel FPGAs,” said Doug Burger, one of Microsoft’s Distinguished Engineers.

“Application and server acceleration requires more processing power today to handle large and diverse workloads, as well as a careful blending of low power and high performance—or performance per Watt, which FPGAs are known for,” said Dan McNamara, corporate vice president and general manager, Programmable Solutions Group, Intel. “Whether used to solve an important business problem, or decode a genomics sequence to help cure a disease, this kind of computing in the cloud, enabled by Microsoft with help from Intel FPGAs, provides a large benefit.”