Google is shifting to liquid cooling with its latest hardware for artificial intelligence, as the heat generated by its new Tensor Processing Units (TPUs) has exceeded the limits of its previous data center cooling solutions.

In his keynote address at the Google I/O 2018 developer conference, Google CEO Sundar Pichai showed off the TPU 3.0, including a server motherboard with tubing connected to its chip assemblies.

“These chips are so powerful that for the first time, we’ve had to introduce liquid cooling in our data centers,” said Pichai. “We’ve put these chips in the form of giant pods. Each of these pods is now eight times more powerful than last year’s (TPUs), well over 100 petaflops.”

The TPU is a custom ASIC tailored for TensorFlow, the open source machine learning software library developed by Google. An ASIC (application-specific integrated circuit) is a chip designed to perform one specific task rather than general-purpose computing. Well-known examples include the custom chips used in bitcoin mining. By tailoring its TPUs to machine learning, Google has been able to squeeze more operations per second out of the silicon.

Google said its third-generation designs include the TPUv3 ASIC chip, a TPUv3 device (presumably the board displayed at I/O) and a TPUv3 "system" consisting of an eight-rack pod.

Early Sign of AI Driving Data Center Design

Google’s adoption of liquid cooling at scale is likely a sign of things to come for hyperscale data centers. The rise of artificial intelligence, and the hardware that often supports it, is reshaping the data center industry’s relationship with power density.

New hardware for AI workloads is packing more computing power into each piece of equipment. That boosts the power density (the amount of electricity used by servers and storage in a rack or cabinet) and the accompanying heat. The trend is challenging traditional practices in data center cooling, and prompting data center operators to adopt new strategies and designs.
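Power density is simple arithmetic: the combined electrical draw of the gear in one rack, usually quoted in kilowatts. Because nearly all of that electricity ends up as heat, the same number is a rough measure of the heat load the cooling system has to remove. The sketch below illustrates the calculation; the function name and the example wattages and server counts are hypothetical, chosen only to show how denser accelerator hardware multiplies the load, and are not figures for Google's or anyone else's equipment.

```python
def rack_power_density_kw(watts_per_server: float, servers_per_rack: int) -> float:
    """Return rack power density in kilowatts (hypothetical helper).

    Nearly all electrical power drawn by IT equipment is converted to heat,
    so this also approximates the heat load the rack's cooling must remove.
    """
    if watts_per_server < 0 or servers_per_rack < 0:
        raise ValueError("inputs must be non-negative")
    return watts_per_server * servers_per_rack / 1000.0


if __name__ == "__main__":
    # Hypothetical example values, not specifications of any real product.
    general_purpose = rack_power_density_kw(watts_per_server=400, servers_per_rack=20)
    accelerator = rack_power_density_kw(watts_per_server=2000, servers_per_rack=16)
    print(f"general-purpose rack: {general_purpose:.1f} kW")
    print(f"accelerator rack:     {accelerator:.1f} kW")
```

Fewer, hungrier boxes per rack can still mean several times the heat per cabinet, which is the pressure on air cooling the article describes.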

Google is on the bleeding edge of data center technology, customizing virtually all elements of its operations, from the chips right up to the building design. However, many of Google’s best practices soon make their way into other hyperscale data centers, and sometimes even into the enterprise.

It’s also worth noting that Google’s in-house technology sets a high bar for other major tech players seeking an edge using AI to build new services and improve existing ones. The processing power in the latest TPUs will push Google’s rivals to keep pushing the power envelope for their AI hardware – which in turn means more electricity, more heat and more cooling. Building a more powerful AI chip allows Google to do more with its artificial intelligence technology, as noted by Pichai in his keynote.

“This is what allows us to develop better models, large models, more accurate models, and help us tackle even larger problems,” said Pichai.

With most of the large tech companies investing heavily in AI, liquid cooling is likely to spread to other hyperscale data centers soon.

Piping Brings Coolant to the Chip

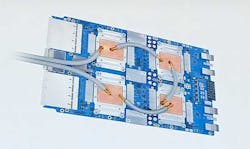

An image of Google’s new TPU 3.0, showing the piping to support liquid cooling. (Image: YouTube)

Google didn't immediately release a technical description of the TPU 3.0 cooling system. But images displayed by Pichai during his keynote show a TPUv3 device design that brings liquid directly to the chip. Coolant is delivered to a cold plate sitting atop each TPUv3 ASIC, with four chips on each motherboard. Each plate has two pipes attached, indicating that coolant circulates through the tubing and the cold plates, carrying heat away from the chips.
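How much coolant a cold plate needs follows from the basic sensible-heat balance Q = ṁ · c_p · ΔT: the heat carried away equals the mass flow rate times the coolant's specific heat times its temperature rise between the inlet and outlet pipes. The sketch below applies that formula assuming plain water as the coolant. Google has not disclosed the TPUv3 chip power, flow rates or coolant, so the 250 W per chip and 10 K temperature rise in the example are hypothetical placeholders, and `required_flow_lpm` is an illustrative helper, not part of any Google tool.

```python
# Sensible-heat balance for a liquid cold plate: Q = m_dot * c_p * delta_T
# Assumption: the coolant is water near room temperature.
WATER_CP_J_PER_KG_K = 4186.0     # specific heat of water, approx.
WATER_DENSITY_KG_PER_L = 0.997   # density of water at ~25 C, approx.


def required_flow_lpm(heat_w: float, delta_t_k: float,
                      cp: float = WATER_CP_J_PER_KG_K,
                      density_kg_per_l: float = WATER_DENSITY_KG_PER_L) -> float:
    """Coolant flow in litres per minute needed to remove heat_w watts
    while the coolant warms by delta_t_k kelvin from inlet to outlet."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_s = heat_w / (cp * delta_t_k)      # kg/s from Q = m_dot * c_p * dT
    return mass_flow_kg_s / density_kg_per_l * 60.0  # kg/s -> L/s -> L/min


if __name__ == "__main__":
    chip_w = 250.0   # hypothetical per-chip heat load
    chips = 4        # four cold plates per motherboard, per the keynote images
    per_plate = required_flow_lpm(chip_w, delta_t_k=10.0)
    print(f"per cold plate: {per_plate:.2f} L/min, per board: {chips * per_plate:.2f} L/min")
```

The formula also shows the trade-off designers face: allowing the coolant to warm more between inlet and outlet cuts the required flow proportionally, while a tighter temperature rise demands more pumping.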

Google says each device has 128 GB of high-bandwidth memory, twice the memory of the predecessor TPUv2 device.

Bringing liquid directly to the chip allows Google to cool more compute-intensive workloads, and also offers the potential for extreme efficiency. Unlike the room-level air cooling seen in many data centers, this type of design benefits from a tightly controlled environment that focuses the cooling as close as possible to the heat-generating components. This could allow the server design to accept a higher inlet temperature for the water, which can be extremely efficient because warmer water can often be cooled without energy-intensive chillers, or to keep the liquid cooler in order to handle more powerful chips.
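The efficiency argument for warmer inlet water can be made concrete with a simplified model. A dry cooler (essentially a radiator and fans) can only bring water down to roughly the outdoor air temperature plus a margin known as the "approach"; whenever that result is at or below the temperature the servers need, no mechanical chiller has to run. The sketch below counts such hours. The function name, the hourly temperatures, the 5 K approach and the supply temperatures are all hypothetical, and real facilities involve more factors (humidity, pumps, partial economization) than this model captures. Nothing here describes Google's actual plant.

```python
from collections.abc import Iterable


def chiller_free_hours(outdoor_temps_c: Iterable[float],
                       supply_temp_c: float,
                       approach_k: float) -> int:
    """Count hours in which a dry cooler alone can deliver coolant at
    supply_temp_c (simplified model, hypothetical helper).

    A dry cooler can cool water only to about outdoor temperature plus its
    approach, so an hour qualifies when t + approach_k <= supply_temp_c.
    """
    return sum(1 for t in outdoor_temps_c if t + approach_k <= supply_temp_c)


if __name__ == "__main__":
    hourly_temps = [10, 18, 24, 30, 35]  # hypothetical outdoor readings, deg C
    for supply in (20, 35):
        hours = chiller_free_hours(hourly_temps, supply_temp_c=supply, approach_k=5)
        print(f"supply {supply} C: {hours} of {len(hourly_temps)} hours chiller-free")
```

Raising the allowed supply temperature widens the band of weather in which heat can be rejected without compressors, which is where the potential efficiency gain comes from.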

A Google TPUv3 system, which consists of eight racks of liquid-cooled devices featuring Google's custom ASIC chips for machine learning. (Image: Google)

The "pods" mentioned by Pichai are rows of eight cabinets, each filled with Tensor Processing Units. Each cabinet has 16 sleds of IT gear, with network switches at the top of the rack. An enclosed area at the bottom of each cabinet likely houses in-rack cooling infrastructure, which could be a coolant distribution unit (CDU), commonly used to circulate coolant, or even a small refrigerant-based cooling unit.
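The pod figures above allow a quick back-of-the-envelope check. Eight cabinets of 16 sleds gives 128 sleds per pod, and dividing Pichai's "well over 100 petaflops" across them gives a rough lower bound on performance per sled. The sketch assumes the compute is spread evenly; the article does not say whether every sled holds TPUs, but if some do not, the per-sled figure for the rest is only higher, so the result remains a lower bound. The helper names are illustrative.

```python
RACKS_PER_POD = 8                   # from the article
SLEDS_PER_RACK = 16                 # from the article
POD_PETAFLOPS_LOWER_BOUND = 100.0   # Pichai: "well over 100 petaflops"


def sleds_per_pod(racks: int = RACKS_PER_POD,
                  sleds_per_rack: int = SLEDS_PER_RACK) -> int:
    """Total sleds in one pod (hypothetical helper)."""
    return racks * sleds_per_rack


def min_petaflops_per_sled(pod_pf: float = POD_PETAFLOPS_LOWER_BOUND) -> float:
    """Lower bound on petaflops per sled, assuming the pod total is spread
    evenly across all sleds. Fewer compute sleds would only raise this."""
    return pod_pf / sleds_per_pod()


if __name__ == "__main__":
    print(f"{sleds_per_pod()} sleds per pod")
    print(f"at least {min_petaflops_per_sled():.2f} petaflops per sled")
```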

For more insights on this trend, here’s some of Data Center Frontier’s coverage of trends in high-density cooling.