Cerebras Unveils Six Data Centers to Meet Accelerating Demand for AI Inference at Scale

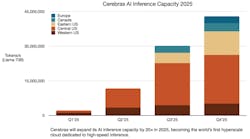

Putting a stake in the ground to define its role in artificial intelligence, AI accelerator developer Cerebras has announced that it will add six new data centers to its global AI inference network, expanding capacity 20X to create what it calls the leading domestic high-speed inference cloud, with 85% of the capacity in the United States.

The inference cloud network will be able to serve over 40 million Llama 70B tokens per second.
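To put the headline figures in perspective, the short Python sketch below converts the quoted throughput into tokens per day and backs out the pre-expansion capacity implied by the 20X figure. It assumes the 40 million tokens per second is the post-expansion total and that the 20X multiplier applies to the same metric; the announcement does not spell either out, and the function names are illustrative only.

```python
# Back-of-the-envelope arithmetic on the announced figures.
# Assumption: 40M tokens/s is the network total AFTER the 20X expansion,
# and the 20X multiplier refers to the same tokens-per-second metric.

SECONDS_PER_DAY = 24 * 60 * 60


def tokens_per_day(tokens_per_second: float) -> float:
    """Tokens served in one day at a sustained rate."""
    return tokens_per_second * SECONDS_PER_DAY


def implied_baseline(target_tokens_per_second: float, expansion_factor: float) -> float:
    """Pre-expansion throughput implied by a target rate and a growth multiple."""
    return target_tokens_per_second / expansion_factor


NETWORK_TOKENS_PER_SECOND = 40_000_000  # figure quoted in the article
EXPANSION_FACTOR = 20                   # "expanding capacity 20X"

daily = tokens_per_day(NETWORK_TOKENS_PER_SECOND)
baseline = implied_baseline(NETWORK_TOKENS_PER_SECOND, EXPANSION_FACTOR)

print(f"Tokens per day at full rate: {daily:,.0f}")
print(f"Implied pre-expansion rate:  {baseline:,.0f} tokens/s")
```

Under those assumptions, the network would serve roughly 3.5 trillion tokens per day at full sustained load, up from an implied baseline of about 2 million tokens per second.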

Inference networks are typically used in real-time AI applications, where pre-trained models make predictions, classify data, detect patterns, or generate outputs based on input data.

This differs from the more commonly discussed AI training: an inference network takes an already-trained AI model and uses it to serve the customer's real-time needs.

Cerebras AI Inference Data Centers:

- Santa Clara, CA (online)

- Stockton, CA (online)

- Dallas, TX (online)

- Minneapolis, MN (Q2 2025)

- Oklahoma City, OK (Q3 2025)

- Montreal, Canada (Q3 2025)

- Atlanta, GA (Q4 2025)

- France (Q4 2025)

Six of the data centers will be operated by Cerebras partner G42, which in 2023 worked with Cerebras to launch the Condor Galaxy AI supercomputer, with an AI training capacity of 36 exaFLOPS.

The AI hardware in the separately owned Oklahoma City and Montreal data centers (more on this below) is owned and operated by Cerebras.

According to Dhiraj Mallick, COO, Cerebras Systems:

Cerebras is turbocharging the future of U.S. AI leadership with unmatched performance, scale and efficiency – these new global datacenters will serve as the backbone for the next wave of AI innovation. With six new facilities coming online, we will add the needed capacity to keep up with the enormous demand for Cerebras industry-leading AI inference capabilities, ensuring worldwide access to sovereign, high-performance AI infrastructure that will fuel critical research and business transformation.

The Cerebras CS-3 System: A Powerful AI Accelerator

These data centers will deploy the Cerebras CS-3, an AI accelerator based on the Wafer Scale Engine (WSE). The WSE is a unique and powerful AI processor developed by Cerebras Systems. Unlike traditional AI chips, which are typically built as smaller individual silicon dies, the WSE is a single, massive chip covering almost an entire silicon wafer. This wafer-scale integration makes it the largest chip ever built and offers significant computational capabilities.

Each WSE has 850,000 AI-optimized cores that have been specifically designed for deep learning workloads. There is 40 GB of SRAM directly on the chip, rather than in external memory, and the chip boasts 20 petabytes per second of memory bandwidth, allowing for extremely fast data movement. AI models are processed on the single, huge wafer, which simplifies software and hardware management compared with massive, interconnected networks of AI GPUs.
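A rough calculation helps show what those figures mean in practice. The Python sketch below uses only the numbers quoted above and assumes decimal units (1 GB = 10^9 bytes, 1 PB = 10^15 bytes) and an even split of memory and bandwidth across cores; neither assumption comes from Cerebras, and the variable names are illustrative.

```python
# Rough per-core and whole-chip figures derived from the specs quoted above.
# Assumptions: decimal units, and SRAM/bandwidth spread evenly across cores.

CORES = 850_000
SRAM_BYTES = 40 * 10**9                 # 40 GB of on-chip SRAM
BANDWIDTH_BYTES_PER_S = 20 * 10**15     # 20 PB/s aggregate memory bandwidth

# Average on-chip memory available to each core.
sram_per_core = SRAM_BYTES / CORES

# Average share of the aggregate bandwidth per core.
bandwidth_per_core = BANDWIDTH_BYTES_PER_S / CORES

# Time to read the entire on-chip SRAM once at the aggregate bandwidth.
full_sweep_seconds = SRAM_BYTES / BANDWIDTH_BYTES_PER_S

print(f"SRAM per core:      {sram_per_core / 1e3:.1f} KB")
print(f"Bandwidth per core: {bandwidth_per_core / 1e9:.1f} GB/s")
print(f"Full SRAM sweep:    {full_sweep_seconds * 1e6:.1f} microseconds")
```

On these assumptions, each core sees roughly 47 KB of local SRAM and about 23.5 GB/s of bandwidth, and the whole 40 GB could in principle be read in around 2 microseconds. That is the sense in which keeping memory on the wafer allows extremely fast data movement.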

Taking On the AI Hyperscalers

Referencing popular reasoning models such as OpenAI o3 and DeepSeek R1, Cerebras points out that these models can take minutes to generate an answer, and claims that it fundamentally changes the business case by speeding up response times tenfold. Perplexity, the AI search engine that has been giving Google a run for its money, currently uses Cerebras technology to provide its instant search results.

While Cerebras has stated that the final locations of the data centers may be subject to change, at least two of the locations, and their launch dates, were specifically identified in the announcement. June 2025 will see more than 300 Cerebras CS-3 systems come online at the Scale Datacenter facility in Oklahoma City. Cerebras will be taking advantage of the Tier 3+ data center and its custom water-cooled solution, which the company believes makes it “uniquely suited” for the deployment of the WSE-based servers.

Trevor Francis, CEO of Scale Datacenter, said:

We are excited to partner with Cerebras to bring world-class AI infrastructure to Oklahoma City. Our collaboration with Cerebras underscores our commitment to empowering innovation in AI, and we look forward to supporting the next generation of AI-driven applications.

Meanwhile, in July of this year, Cerebras expects its Montreal data center, run by Enovum, to be fully operational. This will be the first at-scale deployment of the WSE in the Canadian tech sector. According to Billy Krassakopoulos, CEO of Enovum Datacenter:

Enovum is thrilled to partner with Cerebras, a company at the forefront of AI innovation, and to further expand and propel Canada’s world-class tech ecosystem. This agreement enables our companies to deliver sophisticated, high-performance colocation solutions tailored for next-generation AI workloads.

Cerebras expects to be offering hyperscale-level services to their customers by Q3 of 2025.

6 Key Adjacent Data Center Industry Developments in Light of Cerebras’s New AI Acceleration Data Center Expansion

Cerebras Systems’ announcement of six new data center sites dedicated to AI acceleration, most of them in the U.S., has sent ripples across the data center and AI industries. As the demand for AI compute capacity continues to surge, this move underscores the growing importance of specialized infrastructure to support next-generation workloads.

Here are six important adjacent and competitive developments in the data center industry that are shaping the landscape in light of Cerebras’s expansion.

1. Hyperscalers Doubling Down on AI-Optimized Data Centers

Major cloud providers like Google, AWS, and Microsoft Azure are rapidly expanding their AI-optimized data center footprints. These hyperscalers are investing heavily in GPU- and TPU-rich facilities to support generative AI, large language models (LLMs), and machine learning workloads. Cerebras’s move highlights the competitive pressure on hyperscalers to deliver low-latency, high-performance AI infrastructure.

2. Specialized AI Hardware Ecosystems Gaining Traction

Cerebras’s Wafer-Scale Engine (WSE) technology is part of a broader trend toward specialized AI hardware. Competitors like NVIDIA (with its Grace Hopper Superchips and DGX systems) and AMD (with its Instinct MI300 series) are also pushing the envelope in AI acceleration. This arms race is driving demand for data centers designed to accommodate these unique architectures, including advanced cooling and power delivery systems.

3. Liquid Cooling Adoption Accelerates

The power density of AI workloads is forcing data center operators to rethink cooling strategies. Cerebras’s systems, known for their high compute density, will likely require liquid cooling solutions. This aligns with industry-wide adoption of liquid cooling technologies by companies like Equinix, Digital Realty, and EdgeConneX to support AI and HPC workloads efficiently.

4. Regional Data Center Expansion for AI Workloads

Cerebras’s choice to establish six new sites across North America and France reflects a growing trend toward regional data center expansion to meet AI compute demands. Companies like CyrusOne, QTS, and Vantage Data Centers are also strategically building facilities in secondary markets to support AI and edge computing, ensuring low-latency access for enterprises and researchers.

5. Energy and Sustainability Challenges Intensify

The power requirements of AI-optimized data centers are staggering, with facilities often consuming 20-50MW or more. Cerebras’s expansion highlights the need for sustainable energy solutions, including renewable power procurement and advanced energy management systems. Competitors like Switch and Iron Mountain are leading the charge in building carbon-neutral data centers to address these challenges.

6. Partnerships Between AI Hardware and Data Center Providers

Cerebras’s announcement may signal deeper collaborations between AI hardware innovators and data center operators. Similar partnerships, such as NVIDIA’s work with CoreWeave and Lambda Labs, are becoming increasingly common as the industry seeks to integrate cutting-edge AI technologies into scalable, operational environments. Expect more joint ventures and alliances to emerge as AI infrastructure demands grow.

Conclusion

Cerebras’s expansion into six new data center sites for AI acceleration is a significant milestone that reflects the broader transformation of the data center industry. As AI workloads dominate the demand for compute, the industry is responding with specialized hardware, innovative cooling solutions, and strategic regional expansions. These developments underscore the critical role of data centers in enabling the next wave of AI innovation, while also highlighting the challenges of power, sustainability, and competition in this rapidly evolving space.

At Data Center Frontier, we talk the industry talk and walk the industry walk. In that spirit, DCF Staff members may occasionally enhance content with AI tools such as DeepSeek.

David Chernicoff

Matt Vincent

A B2B technology journalist and editor with more than two decades of experience, Matt Vincent is Editor in Chief of Data Center Frontier.