The rapid expansion of AI and high-performance computing (HPC) has significantly increased the density and complexity of data centers. A 2024 Goldman Sachs analysis indicates that AI workloads can demand 10x the power of traditional servers, posing new challenges in power consumption, cooling efficiency, hardware reliability, and sustainability.

Traditional Data Center Infrastructure Management (DCIM) solutions focus primarily on facility-wide metrics and often lack granular, per-device insights. Server management solutions, on the other hand, are typically limited to a single manufacturer’s servers. Finally, agent-based solutions offer deep workload- and application-level insights but can be difficult to deploy and may introduce inaccuracies, security risks, and performance overhead.

A non-intrusive, IT-centric approach that works across heterogeneous hardware is therefore essential to ensure infrastructure efficiency, manage operational costs, and extend hardware lifespan. Such an approach requires visibility into device-level power consumption, cooling dynamics, and component health across multi-vendor environments.

The Growing Challenges of AI Data Centers

Power and Energy Efficiency

AI infrastructure dramatically increases rack power requirements and overall energy consumption. Traditional monitoring approaches, which focus on facility-wide PUE metrics, fail to monitor and manage power at the rack and device level. Without this visibility, data centers risk inefficient energy distribution, rising operational costs, and challenges in scaling capacity, performance, and sustainability.
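To illustrate what rack- and device-level visibility adds over a single facility-wide figure, the sketch below rolls per-device power readings up to rack totals and flags racks that are approaching their power budget. The `DeviceReading` type, the device names, the rack budgets, and the 90% alert threshold are all illustrative assumptions, not values from any particular product.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class DeviceReading:
    """Hypothetical per-device power sample in watts (e.g., polled from a BMC)."""
    device_id: str
    rack_id: str
    power_w: float


def rack_power_report(readings, rack_budget_w, alert_ratio=0.9):
    """Sum device power per rack and flag racks near their budget.

    Returns {rack_id: (total_w, fraction_of_budget, over_threshold)}.
    alert_ratio is an assumed threshold (90% of the rack budget by default).
    """
    totals = defaultdict(float)
    for r in readings:
        totals[r.rack_id] += r.power_w

    report = {}
    for rack_id, total_w in totals.items():
        ratio = total_w / rack_budget_w[rack_id]
        report[rack_id] = (total_w, ratio, ratio >= alert_ratio)
    return report


# Hypothetical readings: a dense GPU rack next to a lightly loaded rack.
readings = [
    DeviceReading("gpu-node-1", "rack-A", 10_200.0),
    DeviceReading("gpu-node-2", "rack-A", 10_400.0),
    DeviceReading("gpu-node-3", "rack-A", 10_100.0),
    DeviceReading("web-01", "rack-B", 450.0),
    DeviceReading("web-02", "rack-B", 380.0),
]
budgets = {"rack-A": 34_000.0, "rack-B": 8_000.0}

report = rack_power_report(readings, budgets)
for rack, (total, ratio, alert) in sorted(report.items()):
    print(f"{rack}: {total:.0f} W ({ratio:.0%} of budget){' ALERT' if alert else ''}")
```

A facility-wide total (about 31.5 kW here) would hide the fact that one rack is at roughly 90% of its budget while the other sits near 10%.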

According to Uptime Institute’s 2024 Global Data Center Survey, nearly half of respondents worked primarily in facilities more than 11 years old. In these older environments, managing infrastructure health, power consumption, and cooling is critical.

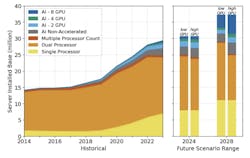

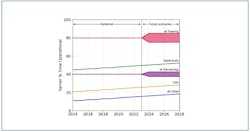

Moreover, the 2024 Report on U.S. Data Center Energy Use produced by Lawrence Berkeley National Laboratory (LBNL) indicates that while AI workloads are growing, conventional servers still represent a significant portion of data center infrastructure, as illustrated in Figure 1. The report also shows that AI servers operate at 80-90% utilization, whereas non-AI servers often run below 60%, as shown in Figure 2.

Identifying, consolidating, and optimizing underutilized conventional servers therefore remains essential for improving power distribution and resource allocation. Doing so reduces energy waste while also improving space usage and overall operational performance.
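As a rough illustration of the first step, identifying consolidation candidates, the sketch below flags servers whose average utilization falls below a cutoff and estimates how many hosts their combined load would occupy at a target utilization. The 60% cutoff echoes the LBNL figure above. The fleet data, the 80% packing target, and the treatment of load as a single additive number are simplifying assumptions. A real consolidation plan would also weigh memory, I/O, and failure domains.

```python
import math


def consolidation_candidates(avg_util, cutoff=0.60):
    """Return names of servers whose average utilization (0-1) is below cutoff."""
    return sorted(name for name, u in avg_util.items() if u < cutoff)


def hosts_needed(avg_util, names, target=0.80):
    """Estimate hosts needed to carry the listed servers' combined load.

    Simplifying assumptions: identical servers and purely additive load,
    so the estimate is total load divided by the target per-host utilization.
    """
    total_load = sum(avg_util[n] for n in names)
    return math.ceil(total_load / target)


# Hypothetical average utilization per server over some observation period.
fleet = {
    "app-01": 0.22, "app-02": 0.18, "app-03": 0.35,
    "db-01": 0.71, "batch-01": 0.12, "batch-02": 0.27,
}
low = consolidation_candidates(fleet)
print(low)
print(len(low), "->", hosts_needed(fleet, low), "hosts")
```

Under these assumptions, five lightly loaded servers (combined load 1.14 server-equivalents) would fit on two hosts.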

As energy costs rise and environmental regulations become stricter, data centers must also focus on improving sustainability and adhering to evolving legislation, such as the EU Energy Efficiency Directive (EED). This requires granular energy monitoring, optimized workload distribution, and intelligent power management strategies to ensure compliance while minimizing environmental impact.

GPU and AI Infrastructure Reliability

AI relies heavily on high-performance GPUs, which are prone to failures from thermal stress, aging components, and excessive utilization. In a densely packed AI cluster, a single faulty GPU can disrupt critical workloads and cause costly, unexpected downtime.

Meta’s 2024 paper “The Llama 3 Herd of Models” reported that during a 54-day period of Llama 3 405B pre-training, about 78% of unexpected interruptions were attributed to confirmed or suspected hardware issues. If GPUs continued to fail at the rate observed in that period, their annualized failure rate would be about 9%, which extrapolates linearly to about 27% over three years.
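The extrapolation above can be made explicit with a minimal sketch that assumes a constant failure rate. First, scale the fraction of GPUs that failed during an observation window to a full year. Then extend that annual rate to three years, either linearly (as in the 27% figure) or by compounding it over the GPUs that have not yet failed. The function names and the first example's inputs are hypothetical. The second example starts from the 9% annualized figure rather than re-deriving it from Meta’s raw counts.

```python
def annualized_failure_rate(failures, fleet_size, window_days):
    """Fraction of the fleet failing per year, assuming a constant rate."""
    return failures / fleet_size * (365 / window_days)


def cumulative_linear(afr, years):
    """Simple linear extrapolation: the annual rate is added once per year."""
    return afr * years


def cumulative_compounded(afr, years):
    """Fraction of the original GPUs that fail at least once in `years`,
    treating each year as an independent trial with probability afr."""
    return 1 - (1 - afr) ** years


# Hypothetical inputs: 100 failures among 10,000 GPUs over 73 days.
print(f"{annualized_failure_rate(100, 10_000, 73):.1%}")             # 5.0%

afr = 0.09  # the annualized figure quoted in the text
print(f"linear, 3 years:     {cumulative_linear(afr, 3):.1%}")      # 27.0%
print(f"compounded, 3 years: {cumulative_compounded(afr, 3):.1%}")  # 24.6%
```

At a 9% annual rate the two methods differ only modestly. The gap widens as the rate grows, because the linear form keeps applying the annual rate to GPUs that have already failed.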

Without real-time GPU power, thermal, health, and utilization monitoring, failures may go undetected until they impact performance. Proactive maintenance can help mitigate risks by identifying anomalies and failure indicators before they become catastrophic.
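One simple way to flag anomalies before they turn into failures is a rolling z-score. Each new telemetry sample, such as a GPU temperature reading, is compared with the mean and standard deviation of a recent window of samples. The sketch below is a minimal, hypothetical version of that idea. The window size, the 3-sigma threshold, and the sample readings are assumptions. In practice, such a statistical check would likely be paired with vendor-specified thermal limits.

```python
from collections import deque
import statistics


class RollingZScoreDetector:
    """Flags samples that deviate strongly from a sliding window of history."""

    def __init__(self, window=20, threshold=3.0):
        self.history = deque(maxlen=window)
        self.threshold = threshold

    def update(self, value):
        """Return True if `value` is anomalous relative to the current window.

        The value is compared against the window *before* it is appended,
        so an outlier is not diluted by its own inclusion; afterwards it is
        added and does influence later comparisons.
        """
        anomalous = False
        if len(self.history) >= 2:
            mean = statistics.fmean(self.history)
            stdev = statistics.stdev(self.history)
            if stdev > 0 and abs(value - mean) / stdev > self.threshold:
                anomalous = True
        self.history.append(value)
        return anomalous


# Hypothetical GPU temperature samples in degrees C: steady, then a sudden jump.
temps = [64, 65, 64, 66, 65, 64, 65, 66, 65, 64, 83]
detector = RollingZScoreDetector(window=10)
flags = [detector.update(t) for t in temps]
print([t for t, f in zip(temps, flags) if f])   # [83]
```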

Beyond hardware failures, staggered device purchases often leave otherwise identical hardware running mismatched firmware versions, which can affect reliability, performance, and security. Centralized firmware management can enforce consistent firmware versions across GPU clusters, helping maintain optimal performance and reliability.
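Firmware drift of this kind can be detected with a simple inventory comparison. Group devices by hardware model, treat the most common firmware version in each group as the baseline, and list the devices that differ. The sketch below assumes an inventory of (device, model, firmware) records, for example exported from each device’s BMC. The model names and version strings are hypothetical. Using the majority version as the baseline is an illustrative choice; a real policy would more likely pin an approved version per model.

```python
from collections import Counter, defaultdict


def firmware_drift(inventory):
    """Find devices whose firmware differs from the most common version
    among devices of the same model.

    inventory: iterable of (device_id, model, firmware_version) tuples.
    Returns {model: (baseline_version, [outlier device_ids])}, including
    only models that have at least one outlier.
    """
    by_model = defaultdict(list)
    for device_id, model, version in inventory:
        by_model[model].append((device_id, version))

    drift = {}
    for model, devices in by_model.items():
        baseline, _ = Counter(v for _, v in devices).most_common(1)[0]
        outliers = sorted(d for d, v in devices if v != baseline)
        if outliers:
            drift[model] = (baseline, outliers)
    return drift


# Hypothetical inventory; gpu-03 came from an earlier purchase batch.
inventory = [
    ("gpu-01", "GPU-X", "1.4.2"),
    ("gpu-02", "GPU-X", "1.4.2"),
    ("gpu-03", "GPU-X", "1.3.9"),
    ("gpu-04", "GPU-X", "1.4.2"),
    ("nic-01", "NIC-Y", "22.31"),
    ("nic-02", "NIC-Y", "22.31"),
]
print(firmware_drift(inventory))
# {'GPU-X': ('1.4.2', ['gpu-03'])}
```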

Liquid Cooling and Thermal Management

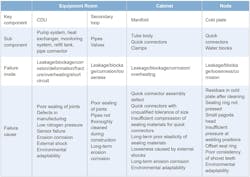

EPRI’s May 2024 whitepaper indicates that cooling systems account for 30–40% of total data center energy consumption. With AI racks exceeding 40 kW, traditional air-cooling methods are no longer sufficient, so direct-to-chip cooling and immersion cooling have become essential for managing these extreme power densities. However, these advanced cooling methods introduce new challenges, particularly in monitoring coolant flow rates, pump efficiency, pressure levels, and leak detection. Figure 3, from an XFusion whitepaper, highlights some of the key cold-plate liquid cooling components and their failure modes.

Liquid leaks in data centers pose severe risks to hardware and can result in substantial downtime and financial losses. For example, at Global Switch’s Paris data center, a cooling system water pump failure allowed water to leak into the battery room, causing a fire that disrupted Google services across Europe.

To prevent such incidents, data centers need real-time thermal and health monitoring and analytics, as well as effective Cooling Distribution Unit (CDU) management.
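As a minimal sketch of the kind of rule-based check a CDU monitoring layer might run, the code below compares one telemetry snapshot against operating limits. It raises alerts for a tripped leak sensor, low coolant flow, out-of-range loop pressure, or high supply temperature. All field names and limit values are illustrative assumptions; actual thresholds come from the CDU and cold-plate vendors’ specifications.

```python
from dataclasses import dataclass


@dataclass
class CduSnapshot:
    """Hypothetical coolant-loop telemetry from one CDU."""
    flow_lpm: float        # coolant flow, liters per minute
    pressure_kpa: float    # loop pressure, kilopascals
    supply_temp_c: float   # coolant supply temperature, degrees C
    leak_detected: bool    # leak-detection sensor state


@dataclass
class CduLimits:
    """Assumed operating limits; real values are vendor-specific."""
    min_flow_lpm: float = 40.0
    min_pressure_kpa: float = 150.0
    max_pressure_kpa: float = 350.0
    max_supply_temp_c: float = 45.0


def check_cdu(s, limits=None):
    """Return a list of alert strings; an empty list means all checks passed."""
    limits = limits or CduLimits()
    alerts = []
    if s.leak_detected:
        alerts.append("LEAK: leak sensor tripped")
    if s.flow_lpm < limits.min_flow_lpm:
        alerts.append(f"FLOW: {s.flow_lpm} L/min below {limits.min_flow_lpm}")
    if not limits.min_pressure_kpa <= s.pressure_kpa <= limits.max_pressure_kpa:
        alerts.append(f"PRESSURE: {s.pressure_kpa} kPa outside "
                      f"{limits.min_pressure_kpa}-{limits.max_pressure_kpa}")
    if s.supply_temp_c > limits.max_supply_temp_c:
        alerts.append(f"TEMP: supply {s.supply_temp_c} C above "
                      f"{limits.max_supply_temp_c}")
    return alerts


# Low flow together with pressure loss may indicate a pump fault or a leak.
alerts = check_cdu(CduSnapshot(flow_lpm=28.0, pressure_kpa=120.0,
                               supply_temp_c=38.0, leak_detected=False))
print(alerts)
```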

Bridging the Gaps with IT-Centric Data Center Management

To address these challenges, data centers must adopt a hybrid management solution that is vendor-agnostic, supports multiple device types and architectures, and provides device- and component-level monitoring alongside broader infrastructure controls. This enables data centers to scale efficiently while controlling energy costs.

Key capabilities of an effective solution include:

- Multi-vendor and heterogeneous hardware support – Ensuring seamless management across a diverse set of devices by different manufacturers.

- Real-time device- and component-level health monitoring – Ensuring performance stability across AI clusters and HPC nodes.

- Per-device power and cooling analysis – Optimizing thermal efficiency and preventing power bottlenecks.

- Firmware management – Enhancing hardware resilience and security compliance.

- Sustainability management – Aligning power consumption with environmental impact goals (PUE, CUE, carbon reporting).
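For reference, The Green Grid defines PUE as total facility energy divided by IT equipment energy. It defines CUE as the total CO2-equivalent emissions caused by the facility’s energy use divided by IT equipment energy, expressed in kgCO2eq/kWh. The sketch below computes both metrics from metered energy. The input figures and the grid emission factor are hypothetical. The sketch also assumes all facility energy comes from a single source with one emission factor.

```python
def pue(total_facility_kwh, it_equipment_kwh):
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    return total_facility_kwh / it_equipment_kwh


def cue(total_facility_kwh, it_equipment_kwh, emission_factor_kg_per_kwh):
    """Carbon Usage Effectiveness in kgCO2eq per kWh of IT energy.

    Assumes a single energy source with one carbon emission factor (CEF),
    in which case CUE = CEF * PUE.
    """
    emissions_kg = total_facility_kwh * emission_factor_kg_per_kwh
    return emissions_kg / it_equipment_kwh


# Hypothetical month: the facility draws 1,500 MWh and IT equipment draws
# 1,000 MWh, on a grid emitting 0.4 kgCO2eq per kWh.
total_kwh, it_kwh, cef = 1_500_000, 1_000_000, 0.4
print(f"PUE = {pue(total_kwh, it_kwh):.2f}")                    # PUE = 1.50
print(f"CUE = {cue(total_kwh, it_kwh, cef):.2f} kgCO2eq/kWh")   # CUE = 0.60 kgCO2eq/kWh
```

The same arithmetic connects to the EPRI figure cited earlier. If cooling alone consumes 30–40% of facility energy, IT equipment receives at most 60–70% of it. That implies a PUE of at least roughly 1.43 to 1.67.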

These capabilities are essential for enhancing operational efficiency, mitigating risks, and ensuring long-term sustainability. As AI and HPC drive unprecedented workloads, intelligent, IT-centric data center management will be critical to balancing performance, reliability, and cost-effectiveness in the evolving landscape.

Rami Radi

Rami Radi is a Senior Product Manager and Solution Architect at AMI who is passionate about bridging hardware and software to create solutions that address the evolving needs of modern IT environments. These solutions enable organizations to enhance reliability, reduce costs, streamline operations, and meet their sustainability goals. At AMI, Rami drives the long-term strategic roadmap for data center management solutions by collaborating with customers, partners, and industry leaders. He focuses on delivering innovative solutions with advanced capabilities that align with emerging trends and meet the demands of both current and future dense, heterogeneous data centers.

AMI is Firmware Reimagined for modern computing. As a global leader in Dynamic Firmware for security, orchestration, and manageability solutions, AMI enables the world’s compute platforms from on-premises to the cloud to the edge. AMI’s industry-leading foundational technology and unwavering customer support have generated lasting partnerships and spurred innovation for some of the most prominent brands in the high-tech industry. For more information, visit www.ami.com.