Immersion Cooling: Top 5 Design and Deployment Considerations

We continue our article series on the fundamental shift that's taking place in the way the data center industry approaches cooling. This week, we'll explore some of the new advancements in immersion cooling.

It’s no wonder immersion cooling has gained traction within the data center industry. Modern designs do not require leaders to replace their existing solutions. Rather, immersion cooling can sit alongside air-cooled and other types of infrastructure. There is also a crucial reason why many are looking more closely at immersion cooling for a growing number of use cases: energy efficiency and overall data center performance have become critical design concerns.

New technologies like solid-state storage have different operating temperatures than their spinning disk counterparts, and this is also true for new use cases around converged systems, HPC, supercomputing, and others. The latest metrics show that regularly operating within the recommended range of 64.4°F to 80.6°F (18°C to 27°C), based on the ANSI/ASHRAE Standard 90.4-2016, will keep your data center nice and healthy. But what about efficiency?

Furthermore, keeping your data center operating at a lower-than-necessary temperature may not help performance. Instead, you’re simply holding the temperature down and paying for it with no added value.

DESIGN CONSIDERATIONS AND WORKING WITH IMMERSION COOLING

With all this in mind, let’s explore the five key considerations when evaluating immersion cooling.

1. Legacy infrastructure. To retrofit or not. If your infrastructure is aging, you must look beyond immersion cooling to bring your data center up to par. However, legacy infrastructure doesn’t have to be old to be a challenge. With new use cases around HPC and high-density computing, leaders need more than blowing air to support density.

New components can be deployed in parallel with legacy IT so that the transition or integration causes as little disruption as possible and benefits IT, users, and the business. Here is an important note for adoption. The evolution of chips has gone from large to small (for handheld devices, for example) and now back to large within the data center. Heat generation is rising quickly with the return to chips that offer denser core counts, more PCIe lanes, and greater capacity. This density will only grow with new solutions like AI, ML, and now generative AI tools such as ChatGPT. Adding and integrating immersion cooling as new and updated technology is brought in is the least disruptive, most cost-efficient, and most beneficial approach.

This integration or transition can be done via management tools, systems integration, and even parallel build-out of new use cases and deployments. Most of all – this does not have to be a complicated process, and the entire process can be very empowering for all associates, IT leaders, and business stakeholders. By integrating and evolving from legacy IT, you learn more about your organization and the capabilities you require to support an emerging market.

That being said, there are a few key considerations. First, there is a chance that a retrofit will make your data center far more complex. A retrofit could work if you already have a few vendors in your data center and manage the infrastructure well. However, in some cases, you’ll need to look at advanced retrofits to reduce the number of vendors in your facility. In other cases, you will need to explore immersion-born designs.

2. When the airflow is no longer enough. As data center airflow management reached mainstream status in the past few years, the evolution of this field has focused on fine-tuning all the developments of the preceding decade.

So – what’s changed? During the ‘90s and mid-2000s, designers and operators worried about the ability of air-cooling technologies to cool increasingly power-hungry servers. With design densities approaching or exceeding 5-10 kilowatts (kW) per cabinet, some believed that operators would have to resort to rear-door heat exchangers and other in-row cooling mechanisms to keep up with the increasing densities. At this point, many realized that traditional airflow might not be enough for AI, HPC, and other data-driven workloads.

At the data center level, improvements to individual systems entail the implementation of accelerator processors, specifically graphics processing units (GPUs), application-specific integrated circuits (ASICs), and field-programmable gate arrays (FPGAs). Today’s HPC and AI systems are highly dense. Depending on the design, traditional air-cooled solutions might not handle the density you need, requiring you to spread the workload and utilize more data center floor space. Or, you could turn to immersion cooling. In data center design, immersion cooling can increase server density by 10x or more, handling up to 144 nodes, 288 CPUs, and 98 kW per 48U cooling tank.
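To put the tank figures above in perspective, here is a rough back-of-the-envelope sketch in Python. It takes the 98 kW, 144-node tank quoted in this article and estimates how many air-cooled cabinets the same IT load would need at the 5-10 kW per-cabinet design densities mentioned earlier. The function names are illustrative, and the sketch assumes every cabinet is filled to its design density and ignores aisles and other floor-space overhead.

```python
import math

TANK_POWER_KW = 98.0  # per 48U tank, figure quoted in this article
TANK_NODES = 144      # nodes per tank, figure quoted in this article


def cabinets_needed(it_load_kw: float, cabinet_density_kw: float) -> int:
    """Air-cooled cabinets needed to host an IT load at a given design density."""
    if cabinet_density_kw <= 0:
        raise ValueError("cabinet density must be positive")
    # Round up: a partially filled cabinet still occupies a floor position.
    return math.ceil(it_load_kw / cabinet_density_kw)


def power_per_node_kw(tank_power_kw: float, nodes: int) -> float:
    """Average power budget per node when a tank is fully populated."""
    if nodes <= 0:
        raise ValueError("node count must be positive")
    return tank_power_kw / nodes


if __name__ == "__main__":
    for density_kw in (5.0, 10.0):
        count = cabinets_needed(TANK_POWER_KW, density_kw)
        print(f"{density_kw:.0f} kW/cabinet: {count} cabinets to match one tank")
    per_node = power_per_node_kw(TANK_POWER_KW, TANK_NODES)
    print(f"Average power per node: {per_node:.2f} kW")
```

Treat the result as an order-of-magnitude comparison only; real layouts depend on the facility, the hardware mix, and the vendor's tank design.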

3. Designing around efficiency and performance. Everyone wants to be as efficient as possible. However, the challenge is ensuring that you’re not losing cooling power as you try to save on energy costs. During the evolution of liquid cooling, we saw direct-to-chip liquid cooling solutions. Today, high-density, modular single-phase immersion cooling solutions have all the necessary compute, network, and storage components. As mentioned earlier, liquids are far more efficient at removing heat than air cooling. For use cases where efficiency and performance are critical, purpose-built immersion cooling designs must be considered.

4. Vendor considerations. In the past, immersion cooling was more of a puzzle-piece configuration. You would install only what you needed, retrofit parts of your data center, and operate independently with a given vendor. Today, immersion cooling solutions are integrated, encompassing your entire use case in a single immersion-cooled tank. As exciting as that might seem, this is also an excellent opportunity to get to know your vendors and what they can do for your data center. Although these new types of integrated cooling tanks are far easier to deploy into a data center, validating and challenging your vendors is still essential. During the evaluation process, ensure that the design fits your overall architecture and supports business operations moving forward.

5. Understanding cost, long-term and short-term. A look at the cost from a different perspective will be critical. That’s why we’re spending some extra time on this section. Because of the data center industry’s unprecedented rise in power consumption, we’ve seen a significant increase in operational and power costs. It has become a challenge for end-users to manage and conserve power in data centers.

This is where we need to look at immersion cooling in the near and long term. Generally speaking, air cooling systems cost less due to their more straightforward operation; however, the conversation around cost and cooling efficiency changes as rack density increases. Furthermore, the industry is now running into the clear economic and density limitations of air-cooled data center systems.

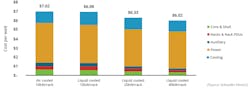

A recent study by Schneider Electric shows that at like densities (10 kW/rack), the cost of an air-cooled data center and a liquid-cooled data center is roughly equal. However, immersion cooling also enables IT compaction, and with compaction comes an opportunity for CapEx and OpEx savings. Compared to the traditional data center at 10 kW/rack, a 2x compaction (20 kW/rack) results in a first-cost savings of 10%. When 4x compaction is assumed (40 kW/rack), savings go up to 14%.
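The compaction figures above translate into a simple calculation. The sketch below applies the 10% and 14% first-cost savings quoted in this article to a baseline build cost. The baseline dollar amount is a made-up placeholder, the 0% entry for 1x reflects the "roughly equal" finding, and the function deliberately refuses to guess at compaction factors the article does not cover.

```python
# First-cost savings (percent) by compaction factor, as quoted in this article,
# relative to a traditional 10 kW/rack data center. The 1x entry approximates
# the "roughly equal" cost at like densities.
SAVINGS_PCT_BY_COMPACTION = {1: 0, 2: 10, 4: 14}
BASELINE_DENSITY_KW = 10


def first_cost(baseline_cost: float, compaction: int) -> float:
    """Estimated first cost after compaction, for the quoted data points only."""
    if compaction not in SAVINGS_PCT_BY_COMPACTION:
        # No interpolation: the article gives figures for 2x and 4x only.
        raise ValueError(f"no savings figure available for {compaction}x compaction")
    savings_pct = SAVINGS_PCT_BY_COMPACTION[compaction]
    return baseline_cost * (100 - savings_pct) / 100


if __name__ == "__main__":
    baseline = 10_000_000  # hypothetical baseline first cost, in dollars
    for factor in sorted(SAVINGS_PCT_BY_COMPACTION):
        density_kw = BASELINE_DENSITY_KW * factor
        cost = first_cost(baseline, factor)
        print(f"{factor}x ({density_kw} kW/rack): ${cost:,.0f}")
```

Swap in your own baseline figure to see what the quoted percentages would mean for a specific build.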

If we examine cost more broadly, we’ll see areas where immersion cooling impacts technology alongside the business. In the most recent AFCOM State of the Data Center report for 2023, we noticed that OPEX and CAPEX costs were rising. According to the report, most respondents reported increased operational expenditures in 2022 (57%). The top drivers of OPEX increases are increasing equipment service and personnel costs, followed by rising energy costs. Similarly, most respondents (53%) reported increased capital expenditures in 2022. The top drivers of CAPEX increases include supply chain challenges, investment in existing facilities construction, investment in an IT refresh, and investment in new facilities construction.

Immersion cooling aims to reduce costs for both OPEX and CAPEX. For example, with a single-phase immersion cooling solution, you could see a 95% reduction in cooling OPEX. With a PUE of 1.03 (certified by a third party), many will realize a return on investment in less than one year, even considering power savings. Similarly, single-phase immersion cooling is rapidly deployable in any greenfield or raw data center environment. Operators will not need raised floors or cold aisles. Further, minimal retrofitting is required for existing data centers. As a result, data center business leaders will see up to a 50% reduction in CAPEX building costs.
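For readers who want to see what a PUE of 1.03 means in practice, here is a minimal sketch. PUE is total facility power divided by IT power, so the non-IT overhead is the IT load multiplied by (PUE - 1). The 1.03 figure comes from this article; the IT load and the baseline PUE of 1.5 are hypothetical placeholders chosen only for illustration. Note that PUE overhead covers all non-IT loads, not just cooling, so this is not a direct check on the cooling OPEX figure.

```python
def overhead_kw(it_load_kw: float, pue: float) -> float:
    """Non-IT (overhead) power implied by a PUE, since PUE = total / IT."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_kw * (pue - 1.0)


def overhead_reduction(pue_before: float, pue_after: float) -> float:
    """Fractional drop in overhead power when PUE improves at constant IT load."""
    if pue_before <= 1.0:
        raise ValueError("baseline PUE must be greater than 1.0")
    if pue_after < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return 1.0 - (pue_after - 1.0) / (pue_before - 1.0)


if __name__ == "__main__":
    it_load_kw = 1000.0    # hypothetical IT load
    baseline_pue = 1.5     # hypothetical air-cooled baseline, not from this article
    immersion_pue = 1.03   # figure quoted in this article

    print(f"Baseline overhead:  {overhead_kw(it_load_kw, baseline_pue):.0f} kW")
    print(f"Immersion overhead: {overhead_kw(it_load_kw, immersion_pue):.0f} kW")
    drop = overhead_reduction(baseline_pue, immersion_pue)
    print(f"Overhead reduction: {drop:.0%}")
```

The size of the reduction depends heavily on the baseline you start from, so substitute your facility's measured PUE before drawing conclusions.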

As an essential point, immersion cooling allows you to leverage a simpler compute ecosystem. Immersion systems have no moving parts, dust particles, or vibrations. Due to the liquid’s uniformity and viscosity, the single-phase immersion design experiences less thermal and mechanical stress. As a result of creating a more streamlined and simplified ecosystem, operators will see a 30% increase in hardware lifespan.

Even as immersion cooling continues to gain traction in the data center industry, there are still a few unknowns about the technology. Let’s break down the five biggest myths related to immersion cooling.

TOP FIVE IMMERSION COOLING MYTHS

1. Water treatment and biodegradability. New integrated immersion cooling solutions leverage coils that do not require any water treatment package beyond what is already used in the data center cooling loop. Furthermore, modern single-phase liquid cooling solutions work with fully biodegradable liquids. Again, there is no toxicity in any of the liquids being used.

2. Combustible oils. Today, most immersion liquids and even closed-loop liquids contain oils. If the oils are heated to evaporation or condensation, they technically are flammable; hence the labels affixed to those liquids. Enclosed loops and single-phase immersion solutions are the safest regarding combustibility. Solutions like Hypertec's leverage single-phase immersion cooling, in which servers are submerged in a thermally conductive dielectric liquid or coolant, a much better heat conductor than air, water, or oil. The coolant never changes state, and the liquid used in leading immersion cooling solutions will not be combustible.

3. Servicing, maintenance, and complexity of running air and immersion cooling. This is where we look at next-generation immersion cooling solutions. Even in advanced retrofit situations, installation and serviceability are much easier than in previous immersion cooling designs. For immersion-born solutions, serviceability is simplified by easy access to all critical components.

4. Air cooling is understood better and more widely used. For the time being, that is correct. However, modern immersion cooling systems rely on standard components in a data center, including power, connectivity, and, depending on the unit, a water loop.

In traditional data center deployments, engineers can use the return water instead of having a chilled water loop, reducing PUE. New immersion cooling systems are engineered to need hookups similar to traditional infrastructure found in data centers while providing better benefits.

Another critical point is that the data center industry is one of the last places where immersion cooling is still being understood. Interestingly, liquid cooling is used in other everyday applications that most people don’t know or forget about. Large-scale computing is one of the last energy-intensive operations not cooled by liquids. Everything from nuclear reactors to car engines to paper mills is already cooled with liquids.

5. Risk with liquids being so close to the cabinets. There is a perception of risk with liquids in the data center where the liquid in the cabinet may cause an outage. However, the fluids used are dielectric, meaning they do not conduct electricity. Therefore, this liquid is safe for technology components. Also, the fluids used in single-phase immersion cooling do not leave residues.

Download the full report, New Data Center Efficiency Imperatives: How Immersion Cooling is Evolving Density and Design, featuring Hypertec, to learn more. In our next article, we'll present an immersion cooling readiness checklist, a few immersion cooling solutions, and explain how you can get started on the journey.