Understanding Data Center Temperature Guidelines

It is important to note that, while closely followed by the industry, the TC9.9 data center temperature guidelines are only recommendations for the environmental operating ranges inside the data center; they are not a legal standard. ASHRAE also publishes many standards, such as 90.1 “Energy Standard for Buildings Except Low-Rise Residential Buildings,” which is used as a reference and has been adopted by many state and local building departments. Prior to 2010, the 90.1 standard virtually exempted data centers. In 2010, the revised 90.1 standard included data centers and mandated highly prescriptive methodologies for their cooling systems. This concerned many data center designers and operators, especially the Internet and social media sites that used a wide variety of leading-edge cooling systems designed to minimize cooling energy. These designs broke with traditional data center cooling designs and could potentially conflict with the prescriptive requirements of 90.1, which could limit rapidly developing innovations in the more advanced data center designs. We will examine 90.1 and 90.4 in more detail in the Standards section.

This article is the second in a series on data center cooling taken from the Data Center Frontier Special Report on Data Center Cooling Standards (Getting Ready for Revisions to the ASHRAE Standard).

Power Usage Effectiveness (PUE)

While the original version of the PUE metric became well known, it was criticized by some because “power” (kW) is an instantaneous measurement at a point in time, and some facilities claimed very low PUEs based on a power measurement made on the coldest day, which minimized cooling energy. In 2011 it was updated to PUE version 2, which is focused on annualized energy (kWh) rather than power.

The revised 2011 version is recognized by ASHRAE as well as the US EPA and DOE, became part of the basis of the Energy Star program, and has become a globally accepted metric. It defined four PUE categories (PUE0-3) and three specific points of measurement. Many data centers do not have energy meters at the specified points of measurement. To address this issue, PUE0 was still based on power, but required the highest power draw, typically during warmer weather (highest PUE), rather than a best-case, cold-weather measurement, to negate misleading PUE claims. The next three PUE categories were based on annualized energy (kWh). In particular, PUE Category 1 (PUE1) specified the output of the UPS and was the most widely used point of measurement. The points of measurement for PUE2 (PDU output) and PUE3 (at the IT cabinet) represented more accurate measurements of the actual IT loads, but were harder and more expensive to implement (see graphic).
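The difference between a power snapshot and an annualized energy figure is easier to see with numbers. The following is a minimal Python sketch, assuming the usual PUE definition (total facility load divided by IT load). The monthly kWh readings, the kW snapshots, and the function names are all made-up illustrations, not data from any real facility.

```python
# Hypothetical monthly meter readings in kWh (illustrative values only).
# IT energy is assumed to be metered at the UPS output (the Category 1 point).
MONTHLY_FACILITY_KWH = [610_000, 560_000, 640_000, 660_000, 720_000, 780_000,
                        820_000, 815_000, 750_000, 680_000, 630_000, 615_000]
MONTHLY_IT_KWH = [432_000] * 12  # assumes a flat 600 kW IT load over 720 hours


def pue(total, it):
    """PUE = total facility power (or energy) / IT equipment power (or energy)."""
    if it <= 0:
        raise ValueError("IT load must be positive")
    return total / it


def annualized_pue(facility_kwh, it_kwh):
    """Ratio of the 12-month energy totals, not the mean of monthly ratios."""
    return pue(sum(facility_kwh), sum(it_kwh))


cold_day = pue(total=780.0, it=600.0)    # kW snapshot on a cold day
peak_day = pue(total=1150.0, it=600.0)   # kW snapshot at peak demand (PUE0 style)
annual = annualized_pue(MONTHLY_FACILITY_KWH, MONTHLY_IT_KWH)

print(f"cold-day snapshot:    {cold_day:.2f}")
print(f"peak-demand snapshot: {peak_day:.2f}")
print(f"annualized:           {annual:.2f}")
```

With these invented figures the cold-day snapshot looks noticeably better than the annualized value, while the peak-demand snapshot looks worse, which is the reason the revised metric moved away from best-case power readings.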

The Green Grid clearly stated that the PUE metric was not intended to compare data centers; it was meant only as a method to baseline and track changes to help data centers improve their own efficiency. The use of a mandatory PUE for compliance purposes in the 90.1-2013 building standard, and in the proposed ASHRAE 90.4 Data Center Energy Efficiency standard, was in conflict with that intended purpose. This issue is discussed in more detail in the section on ASHRAE standards.

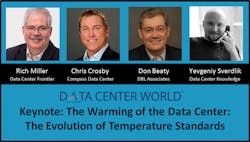

You can see Data Center Frontier’s Rich Miller, Compass Datacenters’ Chris Crosby, DLB Associates’ Don Beaty and moderator Yevgeniy Sverdlik of Data Center Knowledge in a lively discussion about the “warming of the data center,” including how we got here, the impact of current acceptable and allowable temperature ranges on operations and performance, and the controversy surrounding the proposed 90.4 standard, at Data Center World on March 17, 2016.

Understanding Temperature References

To discuss evolving operating temperatures, it is important to examine the differences between dry bulb, wet bulb, and dew point temperatures.

Dry Bulb

This is the most commonly used temperature reference in the specification of IT equipment operating ranges. The readings of a “dry bulb” thermometer (analog or digital) are unaffected by the humidity level of the air.

Wet Bulb

In contrast, there is also a “wet bulb” thermometer, in which the “bulb” (or sensing element) is covered with a water-saturated material such as a cotton wick, and air flows past it at a standardized velocity to cause evaporation, cooling the thermometer bulb (as in the device known as a sling psychrometer). The rate of evaporation and the related cooling effect are directly affected by the moisture content of the air. At 100% RH the air is saturated, the water in the wick will not evaporate, and the wet bulb reading will equal that of a “dry bulb” thermometer. At lower humidity levels, however, the drier the air, the faster the moisture in the wick evaporates, so the “wet bulb” thermometer reads lower than a “dry bulb” thermometer. Wet bulb temperatures are commonly used as a reference for calculating a cooling unit’s capacity (related to latent heat load, i.e., condensation; see Dew Point below), while “dry bulb” temperatures are used to specify sensible cooling capacity. Wet bulb temperatures are also used to project the performance of external heat rejection systems, such as evaporative cooling towers or adiabatic cooling systems. For non-evaporative systems, such as fluid coolers or refrigerant condensers, dry bulb temperatures are used.
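The relationship between the two readings can be approximated numerically. The sketch below uses Stull's 2011 empirical fit, which is not part of the ASHRAE guidelines discussed here. It assumes standard sea-level pressure and is only intended for roughly 5-99% RH and moderate air temperatures; the function name is hypothetical.

```python
import math


def wet_bulb_c(dry_bulb_c, rh_percent):
    """Approximate wet bulb temperature (°C) from dry bulb (°C) and RH (%).

    Stull (2011) empirical fit; assumes standard sea-level pressure.
    """
    if not 5.0 <= rh_percent <= 99.0:
        raise ValueError("fit is only intended for 5-99% RH")
    t, rh = dry_bulb_c, rh_percent
    return (t * math.atan(0.151977 * math.sqrt(rh + 8.313659))
            + math.atan(t + rh)
            - math.atan(rh - 1.676331)
            + 0.00391838 * rh ** 1.5 * math.atan(0.023101 * rh)
            - 4.686035)


# Same dry bulb temperature, three humidity levels: the drier the air,
# the further the wet bulb reading falls below the dry bulb reading.
for rh in (20, 50, 80):
    print(f"24°C dry bulb at {rh}% RH -> wet bulb approx {wet_bulb_c(24.0, rh):.1f}°C")
```

Because evaporative heat rejection is limited by the wet bulb temperature, an estimate like this is the kind of input a designer might use when comparing an evaporative system against a dry cooler at the same site.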

Dew Point

Dew point temperature is the temperature at which the water vapor in the air reaches saturation (100% RH). It varies with the moisture content of the air, and its effect is commonly seen when condensation forms on an object that is colder than the dew point. This is an obvious concern for IT equipment. When reviewing common IT equipment operating specifications, note that the humidity range is specified as “non-condensing”.

Dew point considerations are also important for minimizing latent heat loads on cooling systems. The cooling coil of a typical CRAC/CRAH unit operates below the dew point and therefore inherently dehumidifies while cooling (latent cooling, which requires energy). The humidification system must then use more energy to replace the moisture removed by the cooling coil. Newer cooling systems can avoid this double-sided waste of energy by implementing dew point control.
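As a rough illustration of the dew point relationship described in this section, the following Python sketch estimates dew point with the Magnus approximation and checks whether a surface, such as a cooling coil, would condense moisture. The coefficients are one commonly used parameter set, the function names are hypothetical, and the temperatures in the usage lines are invented examples.

```python
import math

# Magnus coefficients (one commonly used parameter set; an approximation).
A = 17.62
B = 243.12  # °C


def dew_point_c(dry_bulb_c, rh_percent):
    """Approximate dew point (°C) from dry bulb (°C) and relative humidity (%)."""
    if not 0.0 < rh_percent <= 100.0:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = math.log(rh_percent / 100.0) + A * dry_bulb_c / (B + dry_bulb_c)
    return B * gamma / (A - gamma)


def will_condense(surface_c, air_c, rh_percent):
    """True when a surface is at or below the dew point of the surrounding air."""
    return surface_c <= dew_point_c(air_c, rh_percent)


air_c, rh = 24.0, 50.0
print(f"dew point approx {dew_point_c(air_c, rh):.1f}°C")
for coil_c in (7.0, 15.0):
    state = "dehumidifies" if will_condense(coil_c, air_c, rh) else "stays dry"
    print(f"coil at {coil_c:.0f}°C {state}")
```

In this example the colder coil sits below the dew point and removes moisture while it cools, whereas the warmer coil does only sensible cooling, which is the behavior that dew point control aims for.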

Recommended vs Allowable Temperatures

As of 2011, the “recommended” temperature range remained unchanged at 64.4-80.6°F (18-27°C). While the new A1-A2 “allowable” ranges surprised many IT and facility personnel, it was the upper ranges of the A3 and A4 temperatures that really shocked the industry.

While meant to provide more information and options, the new expanded “allowable” data center classes significantly complicated the decision process for the data center operator when trying to balance the need to optimize efficiency, reduce total cost of ownership, address reliability issues, and improve performance.
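The distinction between “recommended” and “allowable” can be expressed as a simple lookup. This is a minimal Python sketch: the recommended range is the 18-27°C cited in this article, while the A1-A4 dry bulb limits are the commonly cited 2011 values and should be treated as an assumption to verify against the guidelines themselves. The function names are hypothetical.

```python
# Dry bulb limits in °C. The recommended range is taken from the article;
# the A1-A4 allowable limits are assumed here and should be verified.
RECOMMENDED_C = (18.0, 27.0)
ALLOWABLE_C = {
    "A1": (15.0, 32.0),
    "A2": (10.0, 35.0),
    "A3": (5.0, 40.0),
    "A4": (5.0, 45.0),
}


def f_to_c(temp_f):
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def classify_inlet(temp_c, equipment_class):
    """Return 'recommended', 'allowable' or 'out of range' for an inlet temperature."""
    low, high = ALLOWABLE_C[equipment_class]
    if RECOMMENDED_C[0] <= temp_c <= RECOMMENDED_C[1]:
        return "recommended"
    if low <= temp_c <= high:
        return "allowable"
    return "out of range"


for temp_f in (75.0, 90.0, 100.0):
    temp_c = f_to_c(temp_f)
    for cls in ("A1", "A3"):
        print(f"{temp_f:.0f}°F ({temp_c:.1f}°C), class {cls}: {classify_inlet(temp_c, cls)}")
```

The same inlet temperature can be out of range for one equipment class and allowable for another, which is part of what complicates the operator's decision.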

Temperature Measurements – Room vs IT Inlet

As indicated in the summary of Thermal Guidelines, the temperature of the “room” was originally used as the basis for measurement. However, “room” temperatures were never truly meaningful, since the temperatures could vary greatly in different areas across the whitespace. Fortunately, in 2008, there was an important, but often overlooked change in where the temperature was measured. The 2nd edition referenced the temperature of the “air entering IT equipment”. This highlighted the need to understand and address airflow management issues in response to the higher IT equipment power densities, and the recommendation of the Cold-Aisle / Hot-Aisle cabinet layout.

In the 2012 guidelines there were also additional recommendations on where to monitor temperatures in the cold aisle. These covered placing sensors inside the face of the cabinet, as well as the position and number of sensors per cabinet (depending on the power density of the cabinets and IT equipment). While this provided better guidance on where to monitor temperatures, very few facility managers had temperature monitoring in the cold aisles, much less inside the racks. Moreover, it did not directly address how to control the intake temperatures of the IT hardware.
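To illustrate why a single “room” figure can hide problems that inlet monitoring reveals, here is a small Python sketch. The cabinet names, sensor positions, and readings are invented for illustration, and the limit used is the upper end of the recommended range cited in this article.

```python
from statistics import mean

RECOMMENDED_MAX_C = 27.0  # upper end of the recommended range cited in the article

# Hypothetical inlet readings (°C) at the bottom, middle and top of each cabinet face.
inlet_readings_c = {
    "cab-01": [19.5, 21.0, 23.5],
    "cab-02": [20.0, 24.5, 29.0],  # e.g. hot air recirculating at the top of the rack
    "cab-03": [18.5, 19.0, 20.5],
}


def room_average(readings):
    """Single 'room' figure: the mean of every sensor in the space."""
    return mean(t for temps in readings.values() for t in temps)


def cabinets_over_limit(readings, limit_c=RECOMMENDED_MAX_C):
    """Map each cabinet whose hottest inlet sensor exceeds the limit to that reading."""
    return {cab: max(temps) for cab, temps in readings.items() if max(temps) > limit_c}


print(f"room average: {room_average(inlet_readings_c):.1f}°C")
print(f"cabinets over {RECOMMENDED_MAX_C}°C: {cabinets_over_limit(inlet_readings_c)}")
```

Here the room average sits comfortably inside the recommended range even though one cabinet has an inlet reading above it, which only per-cabinet monitoring would show.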

ASHRAE vs NEBS Environmental Specifications

Although the ASHRAE Thermal Guidelines are well known in the data center industry, the telecommunications industry created environmental parameters long before TC9.9 released the first edition in 2004. The NEBS* environmental specifications provide a set of physical, environmental, and electrical requirements for local exchanges of telephone system carriers. The NEBS specifications have evolved and been revised many times, and their ownership has changed as telecommunications companies reorganized. Nonetheless, NEBS and its predecessors effectively defined the standards for ensuring reliable equipment operation of the US telephone system for over a hundred years.

In fact, NEBS is referenced in the ASHRAE Thermal Guidelines. The NEBS “recommended” temperature range of 64.4-80.6°F (18-27°C) existed well before the original TC9.9 guidelines, but it was not until 2008, in the 2nd edition, that the Thermal Guidelines were expanded to the same values. More interestingly, in 2011 the new TC9.9 A3 specifications matched the long-standing NEBS allowable temperature range of 41-104°F (5-40°C). However, it is the NEBS allowable humidity range that would shock most data center operators: 5%-85% RH. The related note in the ASHRAE Thermal Guidelines states: “Generally accepted telecom practice; the major regional service providers have shut down almost all humidification based on Telcordia research.”

Revised Low Humidity Ranges and Risk of Static Discharge

In 2015 TC9.9 completed a study of the risk of electrostatic discharge (ESD) and found that lower humidity did not significantly increase the risk of damage from ESD, as long as proper grounding was used when servicing IT equipment. It is expected that the 2016 edition of the Thermal Guidelines will expand the allowable low humidity level down to 8% RH. This will allow substantial energy savings by avoiding the need to run humidification systems to raise humidity unnecessarily.

*NEBS Footnote: NEBS (previously known as Network Equipment-Building System) is currently owned and maintained by Telcordia, which was formerly known as Bell Communications Research, Inc., or Bellcore. Bellcore was the telecommunications research and development company created as part of the break-up of the American Telephone and Telegraph Company (AT&T).

Next week we will explore controlling supply and IT air intake temperatures. If you prefer, you can download the Data Center Frontier Special Report on Data Center Cooling Standards in PDF format from the Data Center Frontier White Paper Library, courtesy of Compass Data Centers.