Power Densities Edge Higher, But AI Could Accelerate the Trend

Data center rack density is trending higher, prompted by growing adoption of powerful hardware to support artificial intelligence applications. It’s an ongoing trend with a new wrinkle, as industry observers see a growing opportunity for specialists in high-density hosting, perhaps boosted by the rise of edge computing.

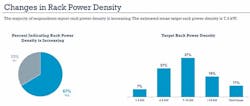

Increases in rack density are widespread, with 67 percent of data center operators reporting rising densities, according to the recent State of the Data Center survey by AFCOM. With an average power density of about 7 kilowatts per rack, the report found that the vast majority of data centers have few problems managing their IT workloads with traditional air cooling methods.

But there are also growing pockets of extreme density, as AI and cloud applications boost adoption of advanced hardware, including GPUs, FPGAs and ASICs. The good news is that these extreme workloads are focused on hyperscale operators and companies with experience in high performance computing (HPC), who are well equipped to manage “hot spots” in their data centers.

“We’ve definitely noticed the momentum just over the last 12 months,” said Sean Holzknecht, President of Colovore, a high-density cooling specialist in Santa Clara, Calif. “This still feels like the infancy stage for this, and it’s poised to take off. AI plays into all of this.”

A key question is whether high-density racks will become more commonplace in multi-tenant data centers, especially as more providers target hyperscale customers. Google, Facebook, Microsoft and Salesforce are all working to use machine learning to build intelligence into their online services.

Air Cooling Still Good for Most

At Data Center Frontier, we’ve been tracking trends in rack densities for years, with our last major update in April 2017. Over the last 12 months, we’ve discussed trends in density at conferences, site visits at data centers, and interviews with numerous industry thought leaders.

The prevailing trend is that power densities are moving slightly higher in enterprise data centers, and densifying more quickly in hyperscale facilities. Here’s a summary of the AFCOM survey, which includes a mix of end users, including some with on-premises data centers.

Data on cooling trends from the recent AFCOM State of the Data Center Survey. (Image: AFCOM)

This aligns with the discussion in last month’s DCF Executive Roundtable, in which several leading experts in data center cooling predicted the current trend of gradually rising densities will continue.

“The bottom line: Higher densities are coming – and with them alternative cooling technologies – but it will be a gradual evolution,” said Jack Pouchet, Vice President of Market Development at Vertiv. “Sudden, drastic change would require fundamental changes to a data center’s form factor, and in most cases that is not going to happen.”

“Rack density is growing slightly, but on average not to the levels served by direct liquid cooling,” said Dennis VanLith, Senior Director Global Product Management at Chatsworth Products, who said most users average between 8 kW and 16 kW per rack. “So rack densities will probably not climb significantly, but the amount of compute power (utilization) per rack will.

“The only wild card here is AI,” VanLith added. “Typically, the PCI cards required to drive AI run at 100% power when models are being trained. When AI takes off, expect extremely large and sustained loads on the data center and system that will increase the average workload. However, I still think direct liquid cooling will be extremely niche, and only for very specific use cases. With power densities averaging below 16 kW, there is no need for liquid cooling.”

Liquid Cooling Still Coming … But Slowly

So when will liquid cooling gain greater traction? That has been the focus of a number of panels at recent data center conferences, as industry executives seek insights on advanced cooling solutions to prepare for the impact of AI hardware. One such session, at a CAPRE event in Ashburn, Virginia, focused on the potential for a future shift to liquid-cooled workloads.

The panel agreed that current use of liquid cooling is focused on niches, and broad adoption will come slowly.

“I think we’re going to get there, just not in 2018,” said Sam Sheehan, Director of Mechanical Engineering at CCG Facilities Integration. “We’ve been seeing more higher density, and a leaning towards water cooling. We’re seeing systems that design an entire row of liquid cooled cabinets.

Servers immersed in a liquid cooling solution from Green Revolution Cooling. (Photo: Green Revolution)

“The tough part is convincing the client that water is the way to go,” she continued. “There’s a stigma – ‘hydrophobia’ we call it. People don’t want water in the data center. We’re not going to see it as much in colo. Containment solutions have really come a long way.”

Sheehan noted that air cooling can be used at densities beyond 20 kW, but only if you are determined.

“You can get there, but your data center will be a wind tunnel,” she said. “Unless you’re in a data center, you may have a hard time understanding how difficult it is to work in that environment.”

Other panelists said that liquid cooling use remains limited, and were skeptical that rising densities would change that anytime soon.

“I think we’re a ways off,” said Steve Altizer, President of Compu Dynamics. “Most data centers are at 200 watts per square foot. It’s just not a very big market right now. If you doubled the amount of liquid cooling, it would go from 0.5 percent to 1 percent. It’s going to be a slow-moving technology.”

Vali Sorell, President of Sorell Engineering, said that for most firms, the inflection point for switching from air cooling to liquid-cooled solutions occurs past 25 kW per rack. When that happens, users have the opportunity to slim down their infrastructure when using “warm water cooling.”

“You don’t need chilled water when you’re dealing with liquid cooling,” said Sorell. “It all comes down to the surface temperature of the chip. You can cool that with much warmer water. You can eliminate the central plant, which has been the most unreliable aspect of the data center.”

The Geography of High Density

High-density computing is about geography as well as technology, with pockets of high-density customers in markets like Silicon Valley and Houston.

The growth of cloud computing and AI is prompting customers to put more equipment into their cabinets and seek out facilities that can cool them. This issue is coming to the fore in markets with a limited supply of data center space, like Santa Clara, placing a premium on getting the most mileage out of every rack. Several recent developments highlight this demand for high-density space in Silicon Valley:

- Vantage Data Centers recently signed a customer requiring 30 kW of power per cabinet in its new V6 data center in Santa Clara, which will bring water directly to the rack.

- A customer with a high-density requirement pre-leased 2 megawatts of space at Colovore, a Santa Clara provider specializing in high-density computing.

- CoreSite added power infrastructure at one of its Santa Clara data centers to provide more capacity for an enterprise customer that was expanding its power usage by boosting the density within its existing data hall, rather than leasing additional space.

Colovore says the 66 racks in its newest 2 megawatt phase are averaging 22.5 kW per rack, with regular spikes as high as 27 kW. The customer, who previously used 5 GPU servers per cabinet, now uses 10 in each cabinet.
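To put those figures in perspective, the arithmetic can be sketched in a few lines of Python. This is only a back-of-the-envelope illustration: the rack count and per-rack figures come from the paragraph above, but treating the full 2 megawatts as capacity available to those racks, and assuming every rack could spike to 27 kW at the same moment, are simplifying assumptions rather than anything Colovore has stated. The function and constant names are hypothetical.

```python
# Figures quoted in the article.
RACKS = 66
AVG_KW_PER_RACK = 22.5
PEAK_KW_PER_RACK = 27.0

# Assumption: the whole "2 megawatt" phase is available to these racks.
PHASE_CAPACITY_KW = 2000.0


def aggregate_load_kw(racks: int, kw_per_rack: float) -> float:
    """Total load if every rack draws the same power."""
    return racks * kw_per_rack


def utilization(load_kw: float, capacity_kw: float) -> float:
    """Fraction of the phase capacity consumed by the given load."""
    return load_kw / capacity_kw


avg_load = aggregate_load_kw(RACKS, AVG_KW_PER_RACK)
# Worst case (assumption): all racks spike at the same time.
peak_load = aggregate_load_kw(RACKS, PEAK_KW_PER_RACK)

print(f"Average load: {avg_load:.0f} kW "
      f"({utilization(avg_load, PHASE_CAPACITY_KW):.1%} of phase)")
print(f"Simultaneous peak: {peak_load:.0f} kW "
      f"({utilization(peak_load, PHASE_CAPACITY_KW):.1%} of phase)")
```

Under these assumptions the 66 racks average about 1.5 megawatts, and even a simultaneous spike across every rack would stay under the 2 megawatt figure.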

High-density racks inside the Colovore data center in Santa Clara, Calif. (Photo: Rich Miller)

“When we launched four years ago, 20kW seemed an outlandish goal,” said Holzknecht. “It just took a while for the market to catch up.” Colovore, which uses water-cooled rear-door chiller units, is now designing its facilities to support 35 kW a rack.

Another strong market for high-density workloads is Houston, where energy companies deploy significant HPC gear to do seismic modeling to research sites for oil and gas exploration. CyrusOne got its start offering high-density colocation in Houston, and the region has seen some of the earliest deployments of new cooling technology. In 2013, geoscience specialist CGG became one of the first companies to deploy an entire immersion data center.

Rob Morris, the Managing Partner at Skybox Data Centers, has tracked the evolution of Houston’s high-density computing sector, and says cooling strategies continue to evolve.

“Five to six years ago, we saw a number of companies that went with mineral oil solutions,” said Morris. “On their new installations, they’re staying away from that and moving to in-cabinet or in-chassis solutions. The efficiency those (immersion) solutions provide is incredible, but the oil is a little messy, and they had to maintain them more often than they thought.

“What we have seen grow by leaps and bounds is the in-rack cooling solutions, providing water in the rack or right to the chip,” Morris continued. “In Houston, it’s very rare that we don’t see a current or future requirement for water to the rack. Water to the rack could become a standard in the next five years or so, as the heat from servers continues to increase. FPGAs are absolute power hogs, but they are so much more efficient on the compute side.”

Will Density Get Distributed?

A wild card in the equation is the emergence of edge computing – distributed data center capacity to support the increased use of consumer mobile devices, especially consumption of video and virtual reality content, and the growth of sensors as part of the Internet of Things. Artificial intelligence emerged as a major edge use case in 2017, while the 800-pound gorilla of edge traffic – the autonomous car – looms on the horizon.

The NVIDIA DGX-1 is packed with 8 Tesla P100 GPUs, providing 170 teraflops of computing power in a 3U form factor. (Photo: Rich Miller)

In a world in which AI becomes ubiquitous, what kind of computing architecture will be required? The Colovore team has specialized in machine learning workloads, and believes an “AI everywhere” world will require a distributed network of small high-density data centers. Many AI jobs, especially inference workloads, can be handled by mobile devices and edge-based compute servers.

Peter Harrison, the Chief Technical Officer of Colovore, says running deep analytics on AI data will be difficult in micro-modular edge data centers.

“What cell tower has the compute capability to support a DGX?” Harrison asked, referencing the popular AI server from NVIDIA. “Unless the fundamental of silicon changes, it’s going to require backhaul to specialized facilities.

“If AI takes off, you have the potential for not just two types of data centers, but three or four types with different purposes,” said Harrison. “You’ll have centers that are specialized in high-density computing backhauling to a cheaper location where you can have lower density servers and storage.”

As hyperscalers use more AI hardware, they may add specialized facilities on their cloud campuses, much as Facebook has custom cold storage data centers at several locations. The same model could eventually apply to large multi-tenant campuses, especially those seeking to land hyperscale deals.
