NVIDIA Unveils Liquid-Cooled GPUs, With Equinix as Early Adopter

In a move to enable wider use of liquid cooling, NVIDIA is introducing its first data center GPU with direct-to-chip liquid cooling. NVIDIA is touting the A100 PCIe GPU as a tool for deploying greener data centers rather than as a way to boost performance.

By expanding the availability of liquid-cooled GPUs, NVIDIA is seeking to reduce the energy impact of artificial intelligence (AI) and other high-density applications, which pose challenges for data center design and management. Powerful new hardware for AI workloads is packing more computing power into each piece of equipment, boosting the power density – the amount of electricity used by servers and storage in a rack or cabinet – and the accompanying heat.

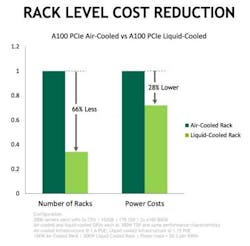

NVIDIA says the liquid-cooled NVIDIA A100 PCIe GPU will deliver the same high performance as air-cooled hardware while using 30% less energy. Because liquid cooling also allows higher rack density, NVIDIA says the new GPUs will let data center operators use less floor space to deliver the same capacity.

“All of these combine to deliver the infrastructure of the data center of the future that handles these massive workloads,” said Ian Buck, NVIDIA VP for Hyperscale and HPC. “We have reimagined the data center.”

Equinix Is an Early Adopter

The liquid-cooled NVIDIA GPUs are running in Equinix's Co-Innovation Facility (CIF) in Ashburn, Virginia, where the digital infrastructure giant is testing technologies for the data center of the future.

“This marks the first liquid-cooled GPU introduced to our lab, and that’s exciting for us because our customers are hungry for sustainable ways to harness AI,” said Zac Smith, Global Head of Edge Infrastructure Services at Equinix. Equinix is the largest provider of colocation and interconnection services, with 10,000 customers and 240 data centers across the globe.

Equinix said last year that it hopes to use liquid cooling technology in its Equinix Metal service to create a high-density, energy-efficient computing platform.

Like most data center operators, Equinix uses air to cool the servers in the facilities where it leases space to customers. Liquid cooling has been used primarily in technical computing, where it enables the use of more powerful hardware that consumes more energy.

Bringing Liquid Cooling to the Mass Market

End users and system builders have adapted GPUs for liquid cooling for some time, but NVIDIA’s move will make these systems more widely available. The announcement was part of NVIDIA’s presentation for the Computex 2022 trade show in Taiwan.

NVIDIA says its liquid-cooled A100 PCIe GPUs will be supported in mainstream servers by at least a dozen system builders, with the first systems shipping in the third quarter of this year. According to an NVIDIA blog post, those system builders include ASUS, ASRock Rack, Foxconn Industrial Internet, GIGABYTE, H3C, Inspur, Inventec, Nettrix, QCT, Supermicro, Wiwynn and xFusion.

The liquid-cooled GPUs will be available in the fall as a PCIe card and will ship from OEMs with the HGX A100 server. The company plans to follow the A100 PCIe card next year with a version based on the new H100 Tensor Core GPU, which uses the NVIDIA Hopper architecture. NVIDIA describes these GPUs as the key building blocks of its vision to transform data centers into “AI factories” that unleash new frontiers in technical computing.

“We plan to support liquid cooling in our high-performance data center GPUs and our NVIDIA HGX platforms for the foreseeable future,” NVIDIA said. “For fast adoption, today’s liquid-cooled GPUs deliver the same performance for less energy. In the future, we expect these cards will provide an option of getting more performance for the same energy, something users say they want.”

NVIDIA says data centers using its liquid-cooled GPUs can achieve a PUE (power usage effectiveness, a leading energy efficiency metric) of 1.15.
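PUE is conventionally defined as total facility power divided by the power delivered to IT equipment, so a value of 1.15 means roughly 15% overhead for cooling, power distribution and other non-IT loads on top of the computing load itself. The short Python sketch below illustrates that arithmetic. The function names and the 1,000 kW IT load are hypothetical values chosen for illustration, not figures from NVIDIA or Equinix.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_kw(it_equipment_kw: float, target_pue: float) -> float:
    """Non-IT power (cooling, power conversion, lighting) implied by a given PUE."""
    return it_equipment_kw * (target_pue - 1.0)


if __name__ == "__main__":
    # Hypothetical example: a 1,000 kW IT load at the PUE of 1.15 cited by NVIDIA.
    it_load_kw = 1000.0
    extra = overhead_kw(it_load_kw, 1.15)
    print(f"Facility overhead: {extra:.1f} kW")
    print(f"Total facility draw: {it_load_kw + extra:.1f} kW")
    print(f"Check PUE: {pue(it_load_kw + extra, it_load_kw):.2f}")
```

Under these assumed numbers, the facility would draw about 1,150 kW in total to run 1,000 kW of IT equipment; a lower PUE shrinks that overhead term.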

Industry Leaders Invest in Liquid Cooling

We’ve been tracking progress in rack density and liquid cooling adoption for years at Data Center Frontier as part of our focus on new technologies and how they may transform the data center.

Cold water is often used to chill air in room-level and row-level systems, and these systems are widely adopted in data centers. Some vendors also integrate water cooling into the rear door of a rack or cabinet. The real shift is in designs that bring liquids into the server chassis to cool chips and components. This can be done through enclosed systems featuring pipes and plates, or by immersing servers in fluids.

Bringing water to the chip enables even greater efficiency, creating tightly designed and controlled environments that focus the cooling as close as possible to the heat-generating components. This approach can also cool components using warmer water.

In 2019 Google decided to shift parts of its data center infrastructure to liquid cooling to support its latest custom hardware for artificial intelligence. Alibaba and other Chinese hyperscale companies have also adopted liquid cooling, and last year Microsoft said it was using immersion-cooled servers in production and believed the technology could help boost the capacity and efficiency of its cloud data centers.

Intel recently introduced an open intellectual property immersion liquid cooling solution and reference design, initiated in Taiwan. (Credit: Intel Corporation)

The growing importance of liquid cooling to technology vendors is reflected in Intel’s announcement last week that it plans to invest more than $700 million to create a 200,000-square-foot research and development “mega lab” in Hillsboro, Oregon, focused on innovative data center technologies, including liquid cooling. The company also announced an open intellectual property (open IP) immersion liquid cooling solution and reference design, with the initial design proof of concept initiated in Taiwan.

The Open Compute Project (OCP) has also created an Advanced Cooling Solutions project to boost collaboration on liquid cooling for the hyperscale ecosystem. A similar initiative is underway at Open19, which serves as an alternative to OCP focused on edge and enterprise computing.

Like most data center operators, Equinix is focused on addressing climate change and reducing the environmental impact of its operations. More powerful processors run hotter and require more cooling, and both the chips and the cooling consume more energy. Smith, who is also president of the Open19 Foundation, says the group’s designs could position Equinix Metal (a cloud-style service where Equinix manages the hardware and leases capacity on bare metal servers) as a path for customers to deploy AI workloads sustainably and quickly through a web interface.

If Equinix succeeds, that could prompt other colocation providers to respond with similar services, as data center customers look beyond on-premises facilities that may struggle to support higher rack density and liquid cooling infrastructure.
