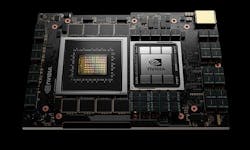

NVIDIA Launches Grace CPU to Bring Arm Efficiency to Massive AI Workloads

The King of the GPUs is getting into the CPU business. NVIDIA is entering the CPU market with an Arm-based processor that will be tightly integrated with its next-generation GPUs.

The NVIDIA Grace CPU was introduced today at the NVIDIA GTC 2021 conference and made an immediate splash in the high performance computing (HPC) world, as Grace will be used in powerful new supercomputers being developed by Los Alamos National Laboratory and the Swiss National Supercomputing Centre.

With its new CPU, NVIDIA is dipping a toe in the water of a market dominated by Intel and AMD. For now, it is targeting the very top of the HPC sector, as Grace is designed for AI supercomputing involving enormous datasets. But Grace offers an all-NVIDIA solution that integrates CPUs and GPUs, a leading deployment model for accelerated HPC and AI computing systems, which have typically featured Intel and AMD CPUs.

“Today we’re introducing a new type of computer,” said Jensen Huang, founder and CEO of NVIDIA, in his keynote at GTC 2021.

NVIDIA’s goal is to use its integrated system to break new ground at the top of the market. For now, Grace will be implemented in the world’s most advanced computing systems, with the ability to adapt the Arm hardware to their needs – something that doesn’t broadly exist in the world of enterprise servers, which is dominated by x86. General availability of the Grace CPU is not expected until the beginning of 2023.

What’s clear is that Grace reflects NVIDIA’s ambition to play an even larger role as the tech landscape is transformed by AI. It is playing a long game, positioning itself for the evolution of massive datasets and AI models, which over time could make more data centers look more like supercomputing operations – what NVIDIA calls the “unified accelerated data center.” It also offers insights into the importance of Arm in NVIDIA’s roadmap.

“Leading-edge AI and data science are pushing today’s computer architecture beyond its limits – processing unthinkable amounts of data,” said Huang. “Using licensed Arm IP, NVIDIA has designed Grace as a CPU specifically for giant-scale AI and HPC. Coupled with the GPU and DPU, Grace gives us the third foundational technology for computing, and the ability to re-architect the data center to advance AI.”

Larger AI Models Require More Compute, Energy

In the GTC keynote, Huang said the giant computer models that power artificial intelligence are exploding in size, testing the limits of current computing architectures. Addressing this challenge is the theme for GTC 2021, where NVIDIA is outlining product updates across the many verticals it serves, including HPC, enterprise computing, the automotive sector and software simulation for “digital twins.”

The Grace CPU is named for computing pioneer Grace Hopper, and is based on the energy-efficient Arm architecture found in billions of smartphones and edge computing devices. An intriguing element of the announcement is how Arm might improve the energy efficiency of the most powerful HPC hardware. Even modest gains in efficiency at the chip level can be amplified across massive systems.

NVIDIA is introducing the Grace CPU as the volume of data and size of AI models are growing exponentially. Today’s largest AI models include billions of parameters and are doubling every two-and-a-half months.
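To put that doubling rate in perspective, here is a minimal back-of-the-envelope sketch. It assumes the 2.5-month doubling period cited above holds steady, which is a simplification, and the function name `growth_factor` is our own illustration rather than anything from NVIDIA.

```python
def growth_factor(months_elapsed: float, doubling_period_months: float = 2.5) -> float:
    """Return how many times larger a quantity becomes after `months_elapsed`
    months, assuming it doubles every `doubling_period_months` months."""
    return 2.0 ** (months_elapsed / doubling_period_months)


if __name__ == "__main__":
    # Print the implied growth over a few illustrative time spans.
    for months in (2.5, 6, 12):
        print(f"{months} months: about {growth_factor(months):.1f}x larger")
```

Under that assumption, a model size that doubles every two-and-a-half months grows roughly 28-fold over a year, which illustrates why memory capacity and bandwidth, not just raw compute, become limiting factors.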

NVIDIA says a Grace-based system will be able to train a one-trillion-parameter natural language processing (NLP) model 10 times faster than today’s state-of-the-art NVIDIA DGX-based systems, which run on x86 CPUs. In addition, NVIDIA NVLink interconnect technology will provide a connection of up to 900 GB/s between the NVIDIA Grace CPU and NVIDIA GPUs to enable higher aggregate bandwidth.

“With an innovative balance of memory bandwidth and capacity, this next-generation system will shape our institution’s computing strategy,” said Thom Mason, Director of Los Alamos National Laboratory (LANL), a leading supercomputing center for the U.S. Department of Energy. “Thanks to NVIDIA’s new Grace CPU, we’ll be able to deliver advanced scientific research using high-fidelity 3D simulations and analytics with data sets that are larger than previously possible.”

LANL will integrate Grace into its design for a “leadership-class advanced technology supercomputer” scheduled to be delivered in 2023. Meanwhile, the Swiss National Supercomputing Centre (CSCS) will work with NVIDIA and Hewlett Packard Enterprise to create its Alps supercomputer in 2023.

Artist’s rendering of Alps, set to be the world’s most powerful AI-capable supercomputer, announced by the Swiss National Supercomputing Centre (CSCS), Hewlett Packard Enterprise (HPE) and NVIDIA. (Image: NVIDIA)

“NVIDIA’s novel Grace CPU allows us to converge AI technologies and classic supercomputing for solving some of the hardest problems in computational science,” said CSCS director Prof. Thomas Schulthess. “We are excited to make the new NVIDIA CPU available for our users in Switzerland and globally for processing and analyzing massive and complex scientific data sets.”

A Milestone for Arm in the Data Center?

Over the past decade, the notion of using Arm to transform data center energy efficiency has been a big vision with limited results. NVIDIA hopes to change that with its planned $40 billion acquisition of Arm, which as we noted last year “is likely to have broad impact on how the world’s IT users harness artificial intelligence.” Arm CEO Simon Segars says the debut of Grace illustrates how “Arm drives innovation in incredible new ways every day.”

“NVIDIA’s introduction of the Grace data center CPU illustrates clearly how Arm’s licensing model enables an important invention, one that will further support the incredible work of AI researchers and scientists everywhere,” said Segars.

One aspect of Arm that has complicated its efforts in the server market is that it uses a different instruction set than the dominant x86 servers, and thus requires software built specifically for it. That means a bigger commitment from customers seeking to benefit from the better efficiency of the Arm architecture. At GTC, NVIDIA is announcing a series of partnerships to expand support for the Arm architecture.

“Arm’s ecosystem of technology companies from around the world are ready to take Arm-based products into new markets like cloud, supercomputing, PC and autonomous systems,” said Huang. “With the new partnerships announced today, we’re taking important steps to expand the Arm ecosystem beyond mobile and embedded.”
