NVIDIA Buys Arm: What It Means for Data Centers, AI and the Server Sector

NVIDIA’s $40 billion acquisition of Arm comes at a particularly dynamic moment for data center hardware, and the deal is likely to have a broad impact on how the world’s IT users harness artificial intelligence.

“We are joining arms with Arm to create the leading computing company for the age of AI,” said NVIDIA CEO Jensen Huang. “AI is the most powerful technology force of our time. Someday, trillions of computers running AI will create a new internet — the Internet-of-Things — thousands of times bigger than today’s internet-of-people.”

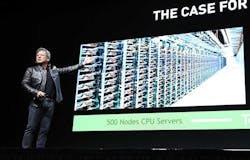

A key question is whether Arm’s famous low-power chip architecture, which is widely deployed in smartphones and other portable devices, can finally have a similar impact in servers. Over the past decade, the notion of using Arm to transform data center energy efficiency has been a big vision with small results. Progress has picked up over the last several years, and NVIDIA hopes to finally make it happen.

“I’m super excited to focus a lot of energy around turning Arm into a world-class data center CPU,” said Huang. “We’re rather unique in being able to take a CPU that has been really refined over time and turn it into a computing platform from end to end – the system, the software, all of the algorithms, and all of the frameworks.

“The amount of computer science horsepower that is inside this company would be quite extraordinary,” he continued. “The beautiful thing is that there are going to be so many different types of data centers in the future. It is not possible for one company to build every single version of them. But we will have the entire network of partners around Arm that can take the architectures we come up with and …. we would like the ecosystem to be as rich as possible, with as many options as possible.”

“That is a massive distributed computing problem that requires consistency of architecture, that requires consistency of software stack and I think the combination of Arm and Nvidia can uniquely solve that problem,” said Simon Segars, CEO of Arm. “And that for me is one of the things that’s really exciting and can come from this combination.”

Here’s a look at how analysts are viewing some of the key issues around the NVIDIA-Arm combination.

Impact on Servers and Artificial Intelligence

“This acquisition gives NVIDIA control of one of the planet’s most strategically important semiconductor designers, though how well ARM’s design-license business model works alongside NVIDIA’s product business remains to be seen,” wrote Jack Clark, policy director at OpenAI, in his Import AI newsletter.

“For the next few years, we can expect the majority of AI systems to be trained on GPUs and specialized hardware (e.g., TPUs, Graphcore). ARM’s RISC-architecture chips don’t lend themselves as well to the sort of massively parallelized computing operations required to train AI systems efficiently. But NVIDIA has plans to change this, as it plans to ‘build a world-class [ARM] AI research facility, supporting developments in healthcare, life sciences, robotics, self-driving cars and other fields.’”

Stacey Higginbotham, who covers the Internet of Things at Stacey on IoT, has long tracked the progress of both Arm and NVIDIA in distributed computing.

“This deal is about the data center and the overarching consolidation in the chip sector as opposed to something deeply meaningful for the Internet of Things,” observed Higginbotham. “The biggest defining feature of GPUs is that they are power-hungry. Yes, they are more efficient even at higher power consumption for doing loads like machine learning, but the overall power consumption associated with training and running neural networks is significant.

“It’s possible that ARM’s focus on efficiency and doing as much computation on as low power footprint as possible could influence Nvidia’s design decisions and help build processors for machine learning that won’t require a new power plant to keep up with demand,” Higginbotham added. “Inside the data center think of this deal as a way to give Nvidia independence from Intel, AMD, and other x86 architectures and potentially a way to bring the power consumption of data-center computing down.”

One aspect of Arm that has complicated its efforts in the server market is that it uses a different instruction set from the x86 architecture of Intel’s dominant servers, so software must be built or ported specifically for Arm. That means a bigger commitment from customers seeking the better energy efficiency of the Arm architecture.

“Software is a key part of the combined company’s vision,” writes Patrick Moorhead of Moor Insights and Strategy. “Arm CEO Simon Segars framed it well when he told me, ‘We’re moving into a world where software doesn’t just run in one place. Your application today might run in the cloud, it might run on your phone, and there might be some embedded application running on a device, but I think increasingly and with the rollout of 5G and with some of the technologies that Jensen was just talking about this kind of application will become spread across all of those places. Delivering that and managing that there’s a huge task to do. And it all requires a computing architecture that can scale from the tiniest sets all the way up to the biggest supercomputer, and we can address that.’”

Competition and Business Model

One of the biggest questions is what the deal will mean for the many chip industry players that license Arm’s technology and now find a key supplier owned by a rival.

“The intention is to continue the business model as is and continue serving the world’s entire semiconductor industry, only with an expanded set of technology as part of Nvidia,” said Arm CEO Segars. “We will be able to invest even more in our core technology. We will be able to work together to create new solutions and make that available to anyone who wants to go and build a chip or system with it. That is the intent, and people can choose not to believe it, and we will have to prove it over time. And that’s what we are going to do.”

Analysts see the deal prompting shifts in the strategies of Arm licensees.

“ARM’s technology is used by hundreds and hundreds of companies, including many prominent rivals to Nvidia, such as chip maker Qualcomm,” veteran chip watcher Tiernan Ray wrote at The Technology Letter. “It’s highly likely those companies will now go looking for a new standard on which to base their chips going forward. Nvidia CEO Jensen Huang pledged in Sunday evening’s announcement to maintain ARM’s open licensing policy, to continue to sell technology to all companies around the world. But I would expect most will start looking for other alternatives.

“The most likely candidate is the open-source RISC-V initiative,” Ray said. “That effort, spearheaded by University of California at Berkeley professor Dave Patterson, aims to create an ecosystem of chip design that is totally open, akin to Linux in the operating system software world. RISC-V has momentum. Even Nvidia has worked with the standard.

“With Nvidia now taking control of ARM, Qualcomm and other companies can be expected to get behind the RISC-V effort in order to have a neutral standard to work with rather than be beholden to an enemy,” Ray concluded. “The result may be an amazing opening-up of the chip industry, an open-source moment rather like what happened with software in the Internet age.”
