The rise of artificial intelligence is transforming the business world. It could shake up the data center along the way.

Powerful new hardware for artificial intelligence (AI) workloads has the potential to reshape the design of data centers and how they are cooled. This week’s Hot Chips conference at Stanford University showcased a number of startups offering new takes on customized AI silicon, as well as new offerings from the incumbents.

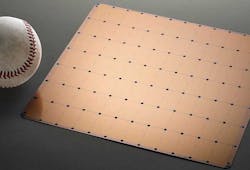

The most startling new design came from Cerebras Systems, which came out of stealth mode with a chip that completely rethinks the form factor for data center computing. The Cerebras Wafer-Scale Engine (WSE) is the largest chip ever built, at nearly 9 inches in width. At 46,225 square millimeters, the WSE is 56 times larger than the largest graphics processing unit (GPU).
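As a quick sanity check on the size comparison, this sketch divides the WSE's die area, which Cerebras reports as 46,225 square millimeters, by an assumed largest-GPU area of 815 square millimeters (NVIDIA's GV100 die, a figure not given in this article). It also treats the die as a square, which is an assumption, to estimate its edge length.

```python
import math

# Reported WSE die area (Cerebras figure).
WSE_AREA_MM2 = 46_225
# Assumption: the "largest GPU" is taken to be NVIDIA's GV100 die, about 815 mm^2.
LARGEST_GPU_AREA_MM2 = 815
MM_PER_INCH = 25.4


def area_ratio(chip_mm2: float, reference_mm2: float) -> float:
    """Return how many times larger chip_mm2 is than reference_mm2."""
    return chip_mm2 / reference_mm2


ratio = area_ratio(WSE_AREA_MM2, LARGEST_GPU_AREA_MM2)
# Assumption: the wafer-scale die is treated as a square for the edge estimate.
side_mm = math.sqrt(WSE_AREA_MM2)

print(f"area ratio: {ratio:.1f}x")
print(f"edge: {side_mm:.0f} mm ({side_mm / MM_PER_INCH:.2f} in)")
```

Under these assumptions the ratio comes out to roughly 56.7, in line with the "56 times larger" claim, and a square of that area measures about 215 mm, or roughly 8.5 inches, on a side.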

Is bigger better? Cerebras says size is “profoundly important” and its larger chips will process information more quickly, reducing the time it takes AI researchers to train algorithms for new tasks.

The Cerebras design offers a radical new take on the future of AI hardware. Its first products have yet to hit the market, and analysts are keen to see if performance testing validates Cerebras’ claims about its capabilities.

Cooling 15 Kilowatts per Chip

If it succeeds, Cerebras will push the existing boundaries of high-density computing, a trend that is already beginning to create both opportunities and challenges for data center operators. A single WSE contains 400,000 cores and 1.2 trillion transistors and uses 15 kilowatts of power.

I will repeat for clarity: a single WSE uses 15 kilowatts of power. For comparison, a recent survey by AFCOM found users were averaging 7.3 kilowatts of power for an entire rack, which can hold as many as 40 servers. Hyperscale providers average about 10 to 12 kilowatts per rack.
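To put the 15-kilowatt figure in rack terms, this sketch divides it by the per-rack averages cited above. It compares a single chip against entire racks, so treat it as an order-of-magnitude illustration rather than a facility planning calculation; the dictionary labels and the helper name are illustrative.

```python
# Reported power draw of a single WSE, in kilowatts.
WSE_KW = 15.0

# Per-rack averages cited in the article, in kilowatts.
RACK_AVERAGES_KW = {
    "AFCOM survey average": 7.3,
    "hyperscale (low end)": 10.0,
    "hyperscale (high end)": 12.0,
}


def racks_equivalent(device_kw: float, rack_kw: float) -> float:
    """How many average racks' worth of power one device draws."""
    return device_kw / rack_kw


for label, rack_kw in RACK_AVERAGES_KW.items():
    print(f"{label}: one WSE = {racks_equivalent(WSE_KW, rack_kw):.2f} racks")
```

Against the AFCOM average, one chip draws just over two racks' worth of power (about 2.05), and against the hyperscale range it draws roughly 1.25 to 1.5 racks' worth.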

The heat thrown off by the Cerebras chips will require a different approach to cooling and to server chassis design. The WSE will be packaged as a server appliance, which will include a liquid cooling system that reportedly features a cold plate fed by a series of pipes, with the chip positioned vertically in the chassis to better cool the entire surface of the huge chip.

A look at the manufacturing process for the Cerebras Wafer Scale Engine (WSE), which was fabricated at TSMC. (Image: Cerebras)

Most servers are designed to use air cooling, and thus most data centers are designed to use air cooling. A broad shift to liquid cooling would require data center operators to bring water to the rack, often through a system of pipes under a raised floor.
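The physics behind the pull toward liquid is the steady-state heat balance Q = m_dot × c_p × ΔT: the mass flow m_dot of coolant needed to carry away Q watts shrinks as the coolant's specific heat c_p and allowed temperature rise ΔT grow. This sketch applies it to a 15-kilowatt load, assuming all of the chip's power ends up as heat, a 10 K coolant temperature rise, and approximate room-temperature properties for water and air; none of these parameters come from Cerebras, and the names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Coolant:
    name: str
    specific_heat_j_per_kg_k: float  # c_p
    density_kg_per_m3: float


# Approximate room-temperature properties (assumptions for illustration).
WATER = Coolant("water", 4186.0, 1000.0)
AIR = Coolant("air", 1005.0, 1.2)
CFM_PER_M3_PER_S = 2118.88  # cubic feet per minute in one cubic meter per second


def volumetric_flow_m3_per_s(heat_w: float, coolant: Coolant, delta_t_k: float) -> float:
    """Volume flow needed to absorb heat_w watts with a delta_t_k temperature rise."""
    # Rearranged heat balance: m_dot = Q / (c_p * delta_T), in kg/s.
    mass_flow_kg_per_s = heat_w / (coolant.specific_heat_j_per_kg_k * delta_t_k)
    # Convert mass flow to volume flow using the coolant's density.
    return mass_flow_kg_per_s / coolant.density_kg_per_m3


HEAT_W = 15_000.0  # reported WSE power, assumed to be dissipated entirely as heat
DELTA_T_K = 10.0   # assumed coolant temperature rise

water = volumetric_flow_m3_per_s(HEAT_W, WATER, DELTA_T_K)
air = volumetric_flow_m3_per_s(HEAT_W, AIR, DELTA_T_K)
print(f"water: {water * 1000 * 60:.1f} L/min")
print(f"air:   {air:.2f} m^3/s (about {air * CFM_PER_M3_PER_S:.0f} CFM)")
```

Under those assumptions, water needs roughly 21.5 liters per minute, while air needs about 1.24 cubic meters per second (around 2,600 CFM) to cool the same single chip, which helps explain why a cold plate fed by pipes is a natural fit for a 15-kilowatt device.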

Google’s decision to shift to liquid cooling with its latest hardware for artificial intelligence raised expectations that others might follow. Alibaba and other Chinese hyperscale companies have adopted liquid cooling.

“Designed from the ground up for AI work, the Cerebras WSE contains fundamental innovations that advance the state-of-the-art by solving decades-old technical challenges that limited chip size—such as cross-reticle connectivity, yield, power delivery, and packaging,” said Andrew Feldman, founder and CEO of Cerebras Systems. “Every architectural decision was made to optimize performance for AI work. The result is that the Cerebras WSE delivers, depending on workload, hundreds or thousands of times the performance of existing solutions at a tiny fraction of the power draw and space.”

Data center observers know Feldman as the founder and CEO of SeaMicro, an innovative server startup that packed more than 750 low-power Intel Atom chips into a single server chassis.

Much of the secret sauce for SeaMicro was in the networking fabric that tied those cores together. Thus, it’s no surprise that Cerebras features an interprocessor fabric called Swarm that combines massive bandwidth and low latency. The company’s investors include two networking pioneers, Andy Bechtolsheim and Nick McKeown.

For deep dives into Cerebras and its technology, see additional coverage in Fortune, TechCrunch, The New York Times and Wired.

New Form Factors Bring More Density, Cooling Challenges

We’ve been tracking progress in rack density and liquid cooling adoption for years at Data Center Frontier as part of our focus on new technologies and how they may transform the data center. New hardware for AI workloads is packing more computing power into each piece of equipment, boosting the power density (the amount of electricity used by servers and storage in a rack or cabinet) and the accompanying heat.

Cerebras is one of a group of startups building AI chips and hardware. The arrival of startup silicon on the AI computing market follows several years of intense competition between chip market leader Intel Corp. and rivals including NVIDIA, AMD and several players advancing ARM technology. Intel continues to hold a dominant position in the enterprise computing space, but the development of powerful new hardware optimized for specific workloads has been a major trend in the high performance computing (HPC) sector.

This won’t be the first time that the data center market has had to reckon with new form factors and higher density. The introduction of blade servers packed dozens of server boards into each chassis, bringing higher heat loads that many data center managers struggled to handle. The rise of the Open Compute Project also introduced new standards, including a 21-inch rack that was slightly wider than the traditional 19-inch rack.

There’s also the question of whether the rise of powerful AI appliances will compress more computing power into a smaller space, prompting redesigns or retrofits for liquid cooling, or whether high-density hardware will be spread out within existing facilities to distribute its impact on existing power and cooling infrastructure.

