Nvidia is Seeking to Redefine Data Center Acceleration

Jensen Huang, the CEO of chipmaker Nvidia, has a vision for data centers that run faster and greener. As he outlined stellar first-quarter earnings, Huang asserted that the orders driving Nvidia's sales numbers are a down payment on a much longer transition in the data center sector, one in which the shift to specialized computing transforms the industry.

"Generative AI is driving exponential growth in compute requirements," said Huang in Nvidia's earnings call. "You're seeing the beginning of a 10-year transition to basically recycle or reclaim the world's data centers and build it out as accelerated computing. You'll have a pretty dramatic shift in the spend of the data center from traditional computing, and to accelerated computing."

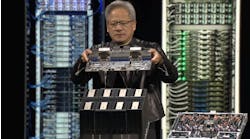

Although generative AI is driving the interest in Nvidia hardware, Huang believes that spending on accelerated computing will become a sustainability story. In his keynote at Computex 2023, Huang made the case that using GPU acceleration to train a large language model (LLM) lets users complete the work more quickly while using less energy.

His example was built around a $10 million budget. Previously, he pointed out, that money would buy 960 CPU-based servers, and training a single LLM on them would consume 11 GWh of energy. The same money spent today would buy just 48 GPU-based servers, which could train 44 LLMs using one-third of the energy. To train just a single LLM, he suggested, you could use two GPU-based servers costing only $400,000, just 4% of the previous expense for the same result. The savings in space, and in the power and cooling needed to deliver comparable results, are clear.
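To make the comparison concrete, here is a back-of-envelope calculation that uses only the figures quoted from Huang's example. It treats the "11" figure as energy in gigawatt-hours (GWh), because training consumes energy over time. It also takes "one-third the energy" literally. The variable names and the `per_llm` helper are illustrative, and the derived numbers are only as precise as the rounded figures in the keynote.

```python
# Back-of-envelope comparison using only the figures quoted from Huang's
# Computex 2023 example. Names are illustrative, not from any Nvidia source.

BUDGET_USD = 10_000_000

# CPU scenario: $10M buys 960 CPU servers, which train one LLM using 11 GWh
# (assumption: the quoted "11" is energy in GWh).
cpu_servers = 960
cpu_energy_gwh = 11.0
cpu_llms_trained = 1

# GPU scenario: $10M buys 48 GPU servers, which use one-third of the CPU
# scenario's energy and train 44 LLMs.
gpu_servers = 48
gpu_energy_gwh = cpu_energy_gwh / 3
gpu_llms_trained = 44

# Single-LLM GPU scenario: two GPU servers for about $400,000.
single_llm_cost_usd = 400_000


def per_llm(total: float, llms: int) -> float:
    """Spread a total (dollars or GWh) evenly across the LLMs trained."""
    return total / llms


cpu_cost_per_llm = per_llm(BUDGET_USD, cpu_llms_trained)
gpu_cost_per_llm = per_llm(BUDGET_USD, gpu_llms_trained)
cpu_energy_per_llm = per_llm(cpu_energy_gwh, cpu_llms_trained)
gpu_energy_per_llm = per_llm(gpu_energy_gwh, gpu_llms_trained)

# Share of the original budget needed for the single-LLM GPU setup.
cost_fraction = single_llm_cost_usd / BUDGET_USD
# How many CPU servers the same budget bought per GPU server.
server_ratio = cpu_servers / gpu_servers

if __name__ == "__main__":
    print(f"Servers per $10M: {cpu_servers} CPU vs {gpu_servers} GPU ({server_ratio:.0f}x fewer)")
    print(f"Cost per LLM: ${cpu_cost_per_llm:,.0f} (CPU) vs ${gpu_cost_per_llm:,.0f} (GPU)")
    print(f"Energy per LLM: {cpu_energy_per_llm:.2f} GWh (CPU) vs {gpu_energy_per_llm:.3f} GWh (GPU)")
    print(f"Single-LLM GPU cost as share of $10M: {cost_fraction:.0%}")
```

On these assumptions, the GPU setup trains each LLM with roughly 1/132 of the energy of the CPU setup. The 4% figure follows directly from $400,000 divided by $10 million.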

The Evolution of Accelerated Computing

Nvidia's $7.2 billion in first-quarter sales included $4.3 billion in its data center segment, up 18% from the previous quarter. So it's no surprise that Huang is bullish on the sector. In assessing Nvidia's vision for the data center, it's helpful to understand acceleration technology and its evolution.
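The quarterly figures above also show how much of Nvidia's business the data center already represents. The short sketch below derives the segment's share of sales and an approximate prior-quarter value. It uses only the rounded numbers quoted here, so the implied prior-quarter figure is an estimate rather than a reported result.

```python
# Nvidia first-quarter figures as quoted in the article, in USD billions.
total_revenue_b = 7.2
data_center_revenue_b = 4.3
sequential_growth = 0.18  # data center sales up 18% from the previous quarter

# Fraction of total sales that came from the data center segment.
data_center_share = data_center_revenue_b / total_revenue_b

# Undo the 18% quarter-over-quarter growth to estimate the prior quarter.
implied_prior_quarter_b = data_center_revenue_b / (1 + sequential_growth)

if __name__ == "__main__":
    print(f"Data center share of sales: {data_center_share:.0%}")
    print(f"Implied prior-quarter data center sales: ${implied_prior_quarter_b:.1f}B")
```

Roughly 60% of the quarter's sales came from the data center segment. Working back from the 18% growth puts the previous quarter at about $3.6 billion.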

Accelerated computing is not a new concept. The core idea is to offload specific workloads to purpose-built hardware, ranging from custom ASICs and FPGAs to dedicated server and networking devices. Because the advantages were obvious, that idea spread fairly rapidly through the industry. From desktops to servers, technologies such as GPU acceleration were widely adopted, speeding up everything from gameplay to video editing to 3D rendering.

Data centers have long added storage and networking accelerators to their IT infrastructure, from dedicated network performance gateways to all-flash array storage, which has become standard. Then came machine learning and model training, areas where GPUs excel at rapidly manipulating the necessary data.

Nvidia is a pioneer in accelerated computing. It released its first GPU accelerator in 1999 to speed up 3D rendering by taking work off the CPU. Over the next two decades, GPU acceleration became the standard for scientific computing, and the world's fastest supercomputers now combine CPUs and GPUs to reach exaflop performance levels (one quintillion floating-point operations per second).

Reimagining the Entire Infrastructure

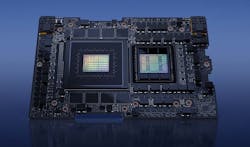

For the accelerated data center, Nvidia envisions a unified infrastructure that includes its Grace CPU and Grace Hopper Superchip, its A100 and H100 GPUs in various packages, and its BlueField data processing units (DPUs). The BlueField DPUs are designed to provide a software-defined, hardware-accelerated infrastructure for networking, storage, and security services.

At Nvidia GTC 2023 earlier this year, the company put a stake in the ground. Its announcements ranged from the DGX deep learning supercomputer, and its availability as DGX Cloud through public cloud providers, to its own Omniverse Cloud platform-as-a-service. They also covered the underlying hardware. This included the Grace Hopper Superchip, which combines the Grace CPU and Hopper GPU architectures in a single package, and third-generation BlueField DPUs and their deployment in Oracle Cloud Infrastructure. The clear goal is to build unified data center architectures that address the demands of high-performance and AI computing.

These products and announcements certainly fit the trends we have been reporting on. The accelerated data center, whether in Nvidia's vision or a competitor's, focuses on efficiency in both workload processing and energy use, and it is clearly a direction the industry will take. But none of this happens on its own. A solid supporting infrastructure that addresses space, power, and cooling in effective, efficient, and sustainable ways remains essential.

For an entertaining view of the integrated and accelerated Nvidia data center and a 3D-rendered Jensen Huang, check out this video.