Nvidia is ramping up its efforts to make generative AI a useful tool, both for service providers and users. At this week’s Nvidia GTC Developer Conference, the company's focus on AI is clear, whether the discussion is about hardware or software, cloud services or on-premises deployments.

From algorithm improvements in computational lithography that can deliver a 40-fold speedup, to an on-demand supercomputer cloud designed for AI, to the availability of foundational technologies that will allow users to make generative AI work for them, the range of announcements with an AI focus is impressive.

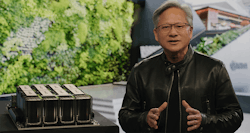

"Accelerated computing and AI have arrived," said Jensen Huang, CEO of Nvidia, in his keynote presentation. Huang noted the impact of the November release of ChatGPT, calling it "the AI heard around the world."

"The iPhone moment of AI has started," said Huang. "The impressive capabilities of generative AI create a sense of urgency for companies to re-imagine their products and business models."

To give every enterprise access to an AI supercomputer, Nvidia is deploying the DGX Cloud: dedicated clusters of DGX AI supercomputing paired with Nvidia’s AI software and accessible to customers through a web browser. The initial rollout is in the Oracle cloud, but Nvidia promises that other public cloud services, including Google and Microsoft, will soon make the DGX AI supercomputing on-demand service available.

Build Your Own AI Tools

These capabilities aren’t being released in a vacuum. Also announced was the AI Foundations Generative AI Cloud Services, three services that allow customers to build proprietary generative AI using their own data sets. The three services are:

- NeMo – a language service

- Picasso – image, video, and 3D modeling service

- BioNeMo – an existing cloud service for biology that has now been upgraded with additional models

NeMo and Picasso run on the DGX Cloud and offer simple APIs that let customer developers build inference workloads, which, once ready for deployment, can be run at scale. Customers can build customized large language models by adding specific knowledge, teaching functional skills, and more closely defining the necessary areas of focus for the generative AI. The existing models available on the service, many of which are GPT-like, according to Manuvir Das, VP of Enterprise Computing, range up to more than half a trillion parameters and will be regularly updated with new training data.

Nvidia uses the example of biotechnology company Amgen. Kimberly Powell, Nvidia VP of Healthcare, said Amgen was able to use the BioNeMo services to customize five proprietary protein prediction models with its own data, bringing the training time down from three months to four weeks.

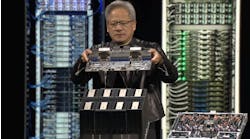

Bare Metal GPUs on a Cloud Near You

Beyond the software services, Nvidia is also partnering with major cloud providers to offer bare-metal instances of its H100 GPUs. For example, in the Oracle cloud, Oracle technology built on top of Nvidia networking and GPUs can scale up to 16,000 instances. Not to be outdone, Amazon is announcing its EC2 ultra cluster of P5 instances, also based on the H100 GPU, with the capability of scaling up to 20,000 GPUs using its EFA technology. And, of course, Meta doesn’t want to be left out, announcing that it is building its next AI supercomputer and deploying the Grand Teton H100 systems to its data centers.

Data centers are likely to be impacted by a couple of hardware announcements. The first is the new L4 universal accelerator for efficient video, AI, and graphics. It’s a single-slot, low-profile GPU that can fit into any server with available space, turning it into an AI server. The card is specifically optimized for AI video, with new encoder and decoder accelerators giving performance 120 times faster than a standard CPU while using 99 percent less energy. It’s four times faster than the previous-generation accelerator and is central to a new partnership announced with Google, in which Google will provide early access to the L4 in its cloud. Additional partners for L4 availability will be announced during the week.

While not a new product, the Nvidia BlueField-3, a 400 Gbps network accelerator, is now in full production. This DPU is capable of offloading, accelerating, or isolating workloads across cloud, HPC, enterprise, and accelerated AI use cases, according to Kevin Deierling, VP of Networking. It also uses the DOCA 2.0 programming model, which offers a broad ecosystem to run software platforms from Canonical, to Cisco, to Dell. Once again, the Oracle cloud infrastructure is early to the dance, using the BlueField-3 DPU to help provide large-scale GPU clustering. Deierling also tells us that the efficiencies gained over the previous-generation BlueField-2 allow eight times the number of virtualized instances to be supported.

For customers who like running private instances of the Microsoft Azure cloud, Nvidia will make its Omniverse Cloud available as a platform-as-a-service in the second half of the year. If you are unfamiliar with the Omniverse platform, it is designed to connect existing tools and workflows to a platform that can add additional capabilities.

There is a lot to digest just from these early announcements, and while we can expect additional Nvidia and partner announcements during the conference, it is clear that the focus is on enabling and delivering AI everywhere.