NVIDIA: Volta GPUs Now Available Across All Major Clouds, OEMs

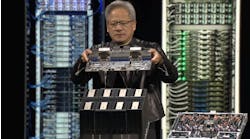

In a presentation at the SC17 supercomputing conference, NVIDIA founder and CEO Jensen Huang said that advanced GPUs based on the company’s Volta architecture are now available through every major computer maker and chosen by every major cloud to deliver artificial intelligence and high performance computing.

The breadth of adoption of its GPU (graphics processing unit) technology is a milestone as the company contends for high performance computing (HPC) business against chipmaking market leader Intel and a growing ecosystem of vendors offering specialized hardware to support the robust growth of technical computing. This week’s SC17 event in Denver provides a showcase for the latest advances in technology for the HPC sector, especially for workloads related to artificial intelligence (AI).

“One of the most important elements of any computing architecture is accessibility,” said Huang. “If it’s not literally everywhere, developers are reluctant to adopt that architecture. With the reach of Volta, developers everywhere are accelerating their adoption of our architecture.”

Huang said that leading cloud providers offering Volta-based services include Amazon Web Services, Microsoft Azure, Google Cloud Platform, Oracle Cloud, Baidu Cloud, Alibaba Cloud and Tencent Cloud. On the OEM side, Dell EMC, Hewlett Packard Enterprise, Huawei, IBM and Lenovo have all announced Volta-based offerings for their customers.

“Volta is the world’s most powerful platform for AI and HPC, and will allow the world’s top minds in scientific research to push the limit on what’s possible in areas like drug discovery, alternative fuel sources and predicting natural disasters,” said Huang. “With Volta now in data centers and clouds around the world, a new wave of innovation is underway that will have an incredible impact across society.”

Parallel Processing Powers AI Gains

NVIDIA’s graphics processing technology has been one of the biggest beneficiaries of the rise of specialized computing, gaining traction with workloads in supercomputing, AI and connected cars. NVIDIA has been investing heavily in innovation in AI, which it sees as a pervasive technology trend that will bring its GPU technology into every area of the economy and society.

In AI, software is organized into neural networks that loosely emulate the learning process of the human brain to solve new challenges. Training these networks requires lots of computing horsepower, which is why the leading players in the field have moved beyond traditional CPU-driven servers.

NVIDIA was founded in 1993, and its graphics processing units (GPUs) quickly became an essential tool for gamers yearning for more horsepower. The company’s GPUs worked alongside CPUs, but took a different approach to processing data. A CPU consists of a few cores optimized for sequential processing, while a GPU has a parallel architecture consisting of hundreds or even thousands of smaller cores designed for handling many tasks simultaneously.
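
To make the CPU-versus-GPU contrast concrete, here is a minimal Python sketch of the two programming styles. It is only an illustration of the idea, not NVIDIA code: the function names (`saxpy_sequential`, `saxpy_kernel`, `saxpy_parallel`) are hypothetical, and a standard-library thread pool stands in for the GPU's many cores. Python threads will not deliver a real speedup here; the point is the shape of the work, with one small "kernel" applied independently to every element.

```python
from concurrent.futures import ThreadPoolExecutor


def saxpy_sequential(a, x, y):
    """CPU-style: a single core walks the elements one after another."""
    out = []
    for i in range(len(x)):
        out.append(a * x[i] + y[i])
    return out


def saxpy_kernel(i, a, x, y):
    """GPU-style 'kernel': the work for one element index i only."""
    return a * x[i] + y[i]


def saxpy_parallel(a, x, y, workers=8):
    """Run the kernel once per element across a pool of workers.

    The thread pool is a stand-in (an assumption of this sketch) for
    the thousands of small cores on a GPU.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in index order, like an output array.
        return list(pool.map(lambda i: saxpy_kernel(i, a, x, y), range(len(x))))


x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
# Both styles compute the same result; only the execution model differs.
assert saxpy_sequential(2.0, x, y) == saxpy_parallel(2.0, x, y)
```

Because no element depends on any other, the work can be spread across as many cores as are available, which is the property that makes this kind of computation a natural fit for a GPU.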

Parallel processing also turned out to be important in the burgeoning field of artificial intelligence, helping NVIDIA quickly gain a foothold.

NVIDIA, Intel Tout Gains in TOP500

At SC17, NVIDIA also touted gains on the latest version of the twice-yearly TOP500 supercomputer list, on which it added 34 new GPU-accelerated systems, bringing its total on the list to 87. The company also increased its total petaflops on the list by 28 percent.

That still leaves NVIDIA well behind Intel, whose chips are found in 471 of the TOP500 systems, including all 137 of the new systems on the new list. There is crossover between the NVIDIA and Intel gains, as many HPC systems use an Intel CPU in tandem with an NVIDIA GPU as an accelerator. Intel noted that new processors from its Intel Xeon Scalable family powered 18 supercomputers on the TOP500.

While it touted the gains for its Xeon Scalable products, Intel’s HPC efforts are focused on the coming rollout of its Nervana Neural Network Processor chips, which are tailored for artificial intelligence workloads. The new AI chips are based on technology Intel acquired in its 2016 purchase of startup Nervana.

The two companies will continue to battle for mindshare and market share as more companies adopt HPC-style technical computing at scale to handle AI and “Big Data” analytics workloads. At SC17, NVIDIA is making its case with a new report from analyst firm Intersect360 Research, which notes NVIDIA’s importance to the future of scientific computing: all of the top 15 HPC applications, and 70 percent of the top 50, are now GPU accelerated.

“GPU computing has reached a tipping point in the HPC market that will encourage continued increases in application optimization,” wrote Addison Snell and Laura Segervall of Intersect360.