Data Center World Experts Drill Down for AI Facility Design and Construction Case Study

Washington, DC -- At last week's Data Center World (April 15-18), Luke Kipfer, Vice President of Data Center Development and Construction for PowerHouse Data Centers, and Christopher McLean, Principal at Critical Facility Group, joined to present on the topic of a "Case Study in Design and Construction of AI Data Centers."

Both Kipfer and McLean are known for their wealth of hands-on, boots-on-the-ground experience managing the design and construction of multiple facilities built explicitly for AI use cases. In their co-presentation, they shared lessons drawn from those data center case studies.

One of the talk's premises was that while many organizations are talking about preparing their data centers for AI, or are even entering the design stage, AI data center deployments are still relatively uncommon. They also involve a daunting scope of long-term demands whose effects may still be largely unknown.

Meanwhile, a data center sector that has been tested in meeting the needs of traditional IT deployments is now leveling up to the challenges of AI making its way to the marketplace in many, if not most, industries.

Providing a well-rounded look at challenges and solutions for the next generation of data center design, the session with Kipfer and McLean delved into how AI applications not only require more power, but also use it differently -- which has major ramifications for other aspects of a data center build.

The talk explored how those aspects play out through looking at real-world examples.

The goal of the presentation, as characterized by the Data Center World program, was to teach attendees how to think about power requirements for AI racks, and to introduce ideas for alternative cooling systems serving AI use cases.

Key questions for Kipfer and McLean included: How much more power density do AI racks require? How does increased power density affect cooling design? How is the cabling of GPU clusters different? What does an AI-enabled system do to possible layout configurations?

Other key areas of interest included how larger cooling systems affect the available space in an AI data center, and how AI considerations differ for renovations of existing data centers compared to new, ground-up builds.

Inside the Room

The presentation discussed the challenges and considerations around deploying GPU clusters and AI, including the need for long-term planning and purposeful data center builds.

Conversation also touched on the shift towards industrial busway and the complexities arising from higher power density, as well as ongoing R&D efforts to develop cooling solutions for increasing heat rejection needs.

McLean and Kipfer emphasized the need for comprehensive planning to address the evolving requirements of modern data center buildings.

Kipfer introduced AI as the next wave of compute, emphasizing how fundamentally it is changing the way data centers and infrastructure affect everyday life. He said his focus was sharing tips on building purpose-built AI data centers, along with lessons learned.

Kipfer said that in an industry climate with so much curiosity about how AI will affect data center design and construction, his company has done some retrofits of existing facilities, but has increasingly concentrated on new, purpose-built AI data centers, with an emphasis on process and project execution.

He touched on how user needs intersect with site location, while comparing the pros and cons of fresh purpose-built facilities vs. retrofitting existing data centers.

Kipfer also emphasized challenges related to factors such as utility power availability, equipment lead times, and the need for long-term planning driven by the intense demand for deploying GPU clusters and AI.

He outlined site selection processes and key decision-making elements in opting for a purpose-built data center build, with an eye toward the need for speed and efficiency in deployment.

Power Density Challenges

McLean then addressed the shift towards industrial busway and the challenges of delivering high power densities in a row, considering weight distribution and structural reinforcement.

He noted, "We're a replacement industry in some ways - PowerHouse actually just knocked down the original AOL headquarters and data center to give birth to an AI data center; Compass in Chicago knocked down the Sears headquarters - Sears now just lives on the internet, and we're rebuilding for future data center infrastructure."

McLean addressed the complexities arising from higher power density, including the nuances of dealing with hardwired appliances, higher voltages, fault currents, and the introduction of new risks that require unique engineering solutions.

He then transitioned to discussing mechanical challenges in data centers, mentioning ongoing R&D efforts to develop cooling solutions for increasing heat rejection needs, "even before problems fully manifest."

AI Growth Leaps from a Long Cloud Plateau, Then Enters Liquid Cooling

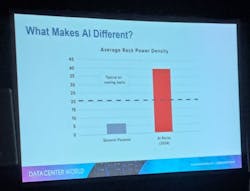

McLean noted how a decade of slow growth in power density across the industry has suddenly given way to a steep climb in data center power design requirements, driven by increased compute capabilities.

"If I rewind back to 2007-ish, when I really started to get deeper into power design for data centers, we're doing a 1 kW rack every 8 sq.ft. Now we're doing above that every three and a half inches high."
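McLean's comparison mixes a floor-area figure (2007) with a rack-height figure (today), so a little arithmetic helps put them on comparable footing. The sketch below is illustrative only and rests on assumptions not stated in the talk: a standard rack unit (1U) of 1.75 inches, so "three and a half inches" equals 2U, and a hypothetical full-height rack of 42U. Because McLean said today's density is "above" 1 kW per 3.5 inches, the per-rack result is a floor, not a measurement.

```python
# Rough density arithmetic for the figures quoted above.
# Assumptions (not from the talk): 1U = 1.75 in, and a hypothetical
# full-height rack with 42U of usable space.
RACK_UNIT_IN = 1.75
RACK_UNITS = 42  # hypothetical rack height in rack units


def watts_per_sq_ft_2007(kw_per_block=1.0, sq_ft_per_block=8.0):
    """2007-era figure: roughly 1 kW for every 8 sq ft of floor space."""
    return kw_per_block * 1000 / sq_ft_per_block


def kw_per_rack_today(kw_per_block=1.0, inches_per_block=3.5):
    """Today's quoted floor: more than 1 kW for every 3.5 in of rack height."""
    rack_height_in = RACK_UNIT_IN * RACK_UNITS
    return kw_per_block * rack_height_in / inches_per_block


print(f"2007: about {watts_per_sq_ft_2007():.0f} W per sq ft of floor")
print(f"Today: more than {kw_per_rack_today():.0f} kW per {RACK_UNITS}U rack")
```

Under these assumptions, the 2007 figure works out to about 125 W per square foot, while the current figure implies more than 21 kW in a single 42U rack.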

"To put it in perspective of how [big] this shift is in 2023-2024, we're not growing by 20% anymore. We grew by 600 percent. To double down on that is another fascinating thing: how in all those gains, 20% additional power consumption yielded some sort of additional heat projection."
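One way to read the contrast between "growing by 20%" and "600 percent" is to ask how many years of steady 20% growth it would take to equal a single 600% jump. The sketch below assumes, since the quote does not say, that the 20% figure is an annual rate, and it reads "grew by 600 percent" literally as ending at 7x the starting value (1 + 6.00).

```python
import math


def years_to_match(multiplier, annual_rate=0.20):
    """Years of compound growth at annual_rate needed to reach multiplier."""
    return math.log(multiplier) / math.log(1 + annual_rate)


# Assumption: a 600% increase means ending at 7x the starting value.
jump = 1 + 6.00
print(f"{years_to_match(jump):.1f} years of 20% annual growth match one 600% jump")
```

On those assumptions, the single jump equals a little over a decade of compounding at the old rate, which lines up with McLean's description of a long plateau followed by a sudden leap.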

"But at the same time we were seeing like 6x performance growth in the compute side of things really quietly. Now with GPUs and the AI toolsets, it would have blown right off the top of the page through that black curtain if you wanted to see that chart. It's truly a remarkable time."

Kipfer then emphasized the industry shift from air cooling toward liquid cooling in high-density AI environments, highlighting challenges around heat absorption, power adaptation, and mechanical balancing.

"Traditionally, talking about pure air-side cooling in a room at a max. amount around 20 kW - obviously there are a lot of deployments there," he said. "But now we're having some higher densities, some HPC needs where we blow way past what we can do from an air cooling perspective."

He added, "In design, we're seeing a lot of directions. We're seeing a lot of people talk about immersion and not necessarily deploying immersion at scale."

McLean also highlighted the resurgence of liquid cooling, including the civil engineering implications of heavy equipment and the need for comprehensive planning to address the evolving requirements of modern data center buildings.

"The most interesting time that I've had as an electrical engineer is taking the transformer out of a UPS and calling the system more efficient, whereas mechanical people have had all of this fun. You're talking about chip-level cooling, cold plate cooling, immersion cooling, in-row cooling, sidecar cooling, end-row CDUs and rack CDUs, all the manifolds going inside the rack."

He continued, "It's a great time to be a mechanical engineer - sort of the sky's the limit on the products that are coming out."

"If you think about this from a density standpoint, we're talking about 45 kW racks now, but we're literally in developing environments right now at 2-3x that. From a rack standpoint, it's really safe to say that the cooling technology that we need a year from now doesn't even exist yet. Or at least that commercially, we're not even aware that it exists yet. So there: It's an unprecedented time for innovation in the data center industry."
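The speakers contrast a practical air-cooling ceiling of around 20 kW per rack with 45 kW racks today and development environments at two to three times that. One way to see why air runs out of headroom is the standard sensible-heat rule of thumb for airflow, Q (BTU/hr) ≈ 1.08 × CFM × ΔT (°F). The sketch below is an illustration under assumptions not taken from the talk: standard sea-level air, a 20°F temperature rise across the rack, and 90 kW and 135 kW as the 2x and 3x multiples of 45 kW.

```python
# Sensible-heat airflow estimate for standard air at sea level.
# Q [BTU/hr] = 1.08 * CFM * dT[°F], and 1 W = 3.412 BTU/hr.
BTU_PER_HR_PER_WATT = 3.412
AIR_FACTOR = 1.08  # 60 min/hr * 0.075 lb/ft^3 * 0.24 BTU/(lb*°F)


def required_cfm(rack_kw, delta_t_f=20.0):
    """Airflow (CFM) needed to carry rack_kw of heat at a delta_t_f rise."""
    heat_btu_per_hr = rack_kw * 1000 * BTU_PER_HR_PER_WATT
    return heat_btu_per_hr / (AIR_FACTOR * delta_t_f)


# 20 kW: rough air-cooling ceiling; 45 kW: today's racks;
# 90 and 135 kW: assumed 2x and 3x development densities.
for kw in (20, 45, 90, 135):
    print(f"{kw:>3} kW rack -> ~{required_cfm(kw):,.0f} CFM at a 20°F rise")
```

Required airflow scales linearly with load, so at the same temperature rise a 135 kW rack needs nearly seven times the air of a 20 kW rack, the kind of gap the speakers point to when explaining the move toward liquid cooling.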

Kipfer and McLean went on to discuss the challenges and considerations of converting an existing facility for AI use, including mechanical and electrical infrastructure needs, connectivity requirements, structural implications (such as ceiling heights and floor loading), and potential solutions for heavy, dense cabinet deployments at scale.

PowerHouse's Kipfer is leading the electrical and mechanical design team for the company's latest purpose-built data center projects, set to go online in 2025.

About the Author

Matt Vincent

A B2B technology journalist and editor with more than two decades of experience, Matt Vincent is Editor in Chief of Data Center Frontier.