At last week’s Microsoft Ignite, the company officially announced that it is getting ready to launch high-performance server CPUs and its own LLM-optimized AI processors.

With the addition of this hardware, along with existing and planned support for popular CPU and AI solutions, Microsoft believes that customers using the Azure stack will get maximum flexibility from their investment, tailoring their deployments to prioritize power, performance, sustainability or cost.

“Software is our core strength, but frankly, we are a systems company. At Microsoft we are co-designing and optimizing hardware and software together so that one plus one is greater than two,” said Rani Borkar, corporate vice president for Azure Hardware Systems and Infrastructure (AHSI). “We have visibility into the entire stack, and silicon is just one of the ingredients.”

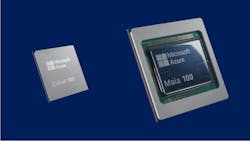

The two new chip-level hardware products are the Maia 100 AI accelerator and the Azure Cobalt 100, an Arm-based CPU designed to run general-purpose cloud workloads. Slides shown at Ignite highlighted that Microsoft believes the Cobalt CPU will be the fastest Arm server CPU in the cloud market.

Tell Me About the AI Accelerator

AI acceleration is the hot topic this year, and this new hardware announcement from Microsoft is sure to stoke the fire.

Brian Harry, a Microsoft technical fellow leading the Azure Maia team, stated that it is not simply the performance of the AI accelerator but its level of integration with the Azure stack that will enable customers to get the most in terms of performance and efficiency.

At the time of the announcement, Sam Altman, then CEO of OpenAI, the generative AI pioneer in which Microsoft has invested at least $12 billion, commented, “We were excited when Microsoft first shared their designs for the Maia chip, and we’ve worked together to refine and test it with our models. Azure’s end-to-end AI architecture, now optimized down to the silicon with Maia, paves the way for training more capable models and making those models cheaper for our customers.”

Microsoft has had the advantage of feedback from OpenAI, and announced that the two companies had worked together to co-design the AI infrastructure for Azure, refining and testing the end-to-end solution with OpenAI’s models.

Of course, Altman was fired by the board of OpenAI on the afternoon of November 17th. He was not unemployed for long: by the morning of Monday the 20th, Microsoft announced that it had hired him to lead a new artificial intelligence team. This came after an attempt to get him reinstated as CEO of OpenAI, which was unsuccessful at that time.

However, per a report in The Guardian, as of today, Sam Altman is set to return as CEO of OpenAI.

Microsoft Jumps on the Arm Bandwagon

It is not surprising that Microsoft chose the Arm architecture for its in-house designed server CPU; the presumption is that the chip will be optimized for the way Microsoft wants it to work within the Azure stack.

Microsoft will be able to minimize any compromises between the hardware and how they want Azure to work in any given situation, an issue that always needed to be addressed when Azure was running on commodity hardware solutions.

At the announcement, Microsoft showed images of custom-designed racks for using their AI accelerator that addressed the specific needs of the product. It is likely that options such as liquid cooling and advanced thermal management have been applied to these new designs.

The announced plan is to run these new hardware components in Microsoft's existing data centers.

What About the Competition?

Microsoft made two significant announcements at Ignite regarding their primary hardware competitors and Azure.

They previewed the new NC H100 v5 Virtual Machine Series built for NVIDIA H100 Tensor Core GPUs, which will be focused on mid-range AI training and generative AI inferencing, and announced that support for the newly announced NVIDIA H200 Tensor Core GPU will be added to the Azure platform next year.

AMD isn’t being left out of the mix; the announcement included the addition of the AMD MI300X accelerated VMs to Azure in the form of the ND MI300 virtual machines, aimed at high-range AI model training capabilities and inferencing.

Why so many choices? Borkar tells us, “Customer obsession means we provide whatever is best for our customers, and that means taking what is available in the ecosystem as well as what we have developed. We will continue to work with all of our partners to deliver to the customer what they want.”


About the Author