As Rack Densities Rise, Liquid Cooling Specialists Begin to See Gains

As artificial intelligence boosts demand for more powerful hardware, are data centers turning to liquid cooling to support more high-density server racks? The picture is mixed. End users report a gradual increase in rack density, and there have been some large new installations for technical computing applications. But hyperscale operators, the largest potential market, remain wary of wholesale adoption of liquid cooling.

Some cooling specialists report growing demand and are investing in additional production capacity. But others say demand for liquid cooling from the data center sector remains “lumpy,” and are shifting their focus to water-cooled systems for the fast-growing eSports market.

We’ve been tracking progress in rack density and liquid cooling adoption for years at Data Center Frontier as part of our focus on new technologies and how they may transform the data center. The rise of artificial intelligence, and the hardware that often supports it, is reshaping the data center industry’s relationship with servers.

New hardware for AI workloads is packing more computing power into each piece of equipment, boosting the power density – the amount of electricity used by servers and storage in a rack or cabinet – and the accompanying heat.

Almost 70 percent of respondents in a recent Uptime Institute survey of enterprise data center users report that their average rack density is rising. Uptime notes that the increase “follows a long period of flat or minor increases, causing many to rethink cooling strategies.” Uptime Intelligence says it regards this as “a medium- to long-term trend.”

The AFCOM State of the Data Center survey for 2019 also cited a trend towards denser racks. Twenty-seven percent of data center users said they expected to deploy high performance computing (HPC) solutions, and another 39 percent anticipated using converged architectures, which tend to be denser than traditional servers.

Most servers are designed to use air cooling. Google’s decision to shift to liquid cooling with its latest hardware for artificial intelligence raised expectations that others might follow. Alibaba and other Chinese hyperscale companies have adopted liquid cooling, and Microsoft recently indicated that it has been experimenting with liquid cooling for its Azure cloud service. But Microsoft has decided to hold off for now, and Facebook has instead opted for a new approach to air cooling to operate in hotter climates.

At the recent DCD Enterprise event in New York, several panelists said they believe the widespread interest in AI will lead to more high-density computing.

A key question is where those high-density racks will reside. Switch has long been a leader in high-density computing, with companies seeking out its Las Vegas campus to handle workloads that need lots of cooling.

“Most of our customers are involved in AI,” said Eddie Schutter, the Chief Technology Officer at Switch. But Schutter said that most continue to use standard x86 platforms and few are exceeding 20 kW per cabinet, which Switch can easily support with air cooling.

Schutter said that many companies may choose to provision data-intensive AI applications through the cloud computing model, taking advantage of the data center expertise of hyperscale platforms and hosting specialists.

“I think what you will see is shared services through MSPs (managed service providers) offering AI-based leasing time,” said Schutter. “There are some use cases that make a lot of sense today, but the use cases aren’t pushing (higher densities).”

DUG McCloud

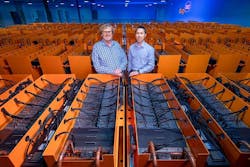

That’s the case with DUG McCloud, a new cloud service providing on-demand access to computer modeling of seismic data for energy companies, bringing new levels of precision to oil and gas exploration. The huge system from DownUnder GeoSolutions (DUG) has just gone live at the Skybox Datacenters facility in Houston’s Energy Corridor, where DUG has leased 15 megawatts of capacity.

The DUG cooling system fully submerges standard HPC servers in tanks filled with a dielectric fluid. The project will deploy more than 720 enclosures using the DUG Cool liquid cooling system, which reduces the system’s energy usage by about 45 percent compared to traditional air cooling, the company said.

DUG is one of the world’s largest users of Intel Xeon Phi Knights Landing (KNL) nodes, and will use more than 40,000 KNL nodes for the DUG McCloud data center in Houston, which has been dubbed “Bubba.”

DUG has leased a second data hall at Skybox, with plans in place to commence build-out in late 2019. The massive system is expected to deliver 250 petaflops – more computing power than the world’s top supercomputers. Skybox has additional land available for long-term growth, which DUG believes could push the system past 1 exaflop by 2021.

STULZ USA

A small group of specialists targets the market for water-cooled servers. To get a sense of what’s happening in the market, let’s look at recent developments at several of them. We’ll start with STULZ USA, a subsidiary of STULZ GmbH, one of the world’s largest suppliers of air conditioning equipment.

David Meadows (center) of STULZ USA discusses trends in liquid cooling at the recent DCD Enterprise NY conference. At left is Joerg Desler, President of STULZ Air Technology Systems. (Photo: Rich Miller)

At DCD Enterprise, STULZ USA showcased its Micro DC, a single-cabinet modular micro data center that can support up to 48 rack units.

The STULZ USA Micro DC in a liquid cooling configuration, with a coolant distribution unit in the bottom of the rack. (Photo: Rich Miller)

STULZ has partnered with CoolIT Systems to integrate a direct contact liquid cooling (DCLC) system, which uses passive cold plates that can cool any combination of CPU, GPU and memory. The cabinet can include an on-board rack-mounted coolant distribution unit (CDU) housed in the bottom of the 19-inch rack.

David Meadows, the Director of Industry Standards and Technology at STULZ USA, said the Micro DC was ideal for deploying high-density racks of up to 100 kW in environments that would test the limits of air cooling.

“To me, it is totally logical that everything is liquid cooled,” said Meadows. “There is a little higher up-front cost, but we can use less space and have a lower operating cost. We are absolutely convinced these are the concepts for the future.”

STULZ USA has expanded its manufacturing capacity with a new plant in Dayton, Ohio, and also recently signed an OEM agreement with Trane that should expand adoption of STULZ USA’s data center equipment.

CoolIT Systems

CoolIT Systems is also adding manufacturing capacity to meet demand. Earlier this year, Calgary-based CoolIT announced an investment by Vistara Capital Partners to help the company expand production to service data center customers.

“With our order backlog expanding by 400 percent in the last year, access to additional capital is critical for continued success,” said CoolIT Systems CFO Peter Calverley. “With the creative financing package provided by Vistara, the financial foundation is in place for 2019 to be another year of significant growth in data center liquid cooling sales for CoolIT.”

In addition to its work with STULZ on the enclosure side, CoolIT partners with chip and server OEMs including Intel, Dell, HPE and Cray. CoolIT’s direct liquid cooling systems can operate with warm water cooling, reducing the need for fans and room-level cooling and air handlers.

“CoolIT Systems is at an exciting inflection point, managing a significant expansion of their business as liquid cooling is experiencing accelerated adoption by data center OEMs and operators,” said Noah Shipman, a partner at Vistara.

Chilldyne

Chilldyne is another specialist in direct-to-chip liquid cooling that is reporting growth and expansion. The Carlsbad, Calif. company is currently building out a $1 million-plus liquid cooling system for an American computer company’s Open Compute Project (OCP) servers that are going into a Department of Energy National Lab. Chilldyne says it sees “substantial opportunities” with DOE national labs, and also sees the potential for OCP systems to expand its market.

“We are seeking a $1.5 million investment to take advantage of our liquid cooling system we’re currently building that will be ready for viewing in September,” said Bob Spears, President and CEO of Chilldyne. “We’re also in discussions with several large companies that have expressed interest in making a strategic investment in Chilldyne to extend their traditional data center business into the cooling space or to take our innovative liquid cooling into adjacent verticals outside of the data center.”

Spears said Chilldyne has also lined up a business development exec with years of experience selling liquid cooling systems to server hardware OEMs and HPC customers. “Our sales push will start this Fall when our National Lab system comes online and we’ll be showcasing our negative pressure liquid cooling system integrated with our Hardware partner’s OCP server at SC18,” said Spears.

“We see Intel increasing the power of their CPUs, so the future for liquid cooling is bright,” added Steve Harrington, CTO and Founder of Chilldyne.

Asetek

While others are gearing up for data center growth, Asetek is scaling back. Its data center business boosted revenue and margins in the first quarter of 2019, but still lost $1.3 million and lagged the company’s gaming and enthusiast PC businesses.

“Data center market adoption of liquid cooling solutions takes time and is lagging our expectations despite its strong value proposition,” Asetek said. “The company has decided to discontinue segment revenue guidance until the data center business more clearly develops into a meaningful business. Though the company anticipates long-term revenue growth in this segment, Asetek’s historical investment in data center is considered sufficient at this time and therefore spending in 2019 will be scaled down from the prior year level.”

Asetek noted that the global high-end gaming population is expected to exceed 29 million users by 2021, representing annual growth of more than 5 percent. “The growing popularity of PC gaming and eSports has fueled revenue growth in recent years,” the company said. “To meet the demands of competitive gamers, the powerful machines in use today require advanced cooling for both CPUs and graphics processing units (GPUs).”

Asetek said the liquid cooling market suffers from its disjointed nature. “There is an apparent need for public standards to trigger wider data center adoption of liquid cooling,” said Asetek. “The company is participating in targeted campaigns to influence politicians and support wider understanding of the significant environmental and circular economy benefits enabled by liquid cooling.”

In 2018 the Open Compute Project (OCP) created an Advanced Cooling Solutions project, saying it is “responding to an industry need to collaborate on liquid cooling and other advanced cooling approaches.”
